Key findings

- 63% do not use multifactor authentication for cloud access

- 50% do not rotate access keys periodically

- 92% do not log access to cloud storage, eliminating the ability to conduct forensic analysis of an incident

- 26% of workloads expose SSH ports to the internet and 20% expose RDP

Public cloud has made possible previously unheard-of scale, performance, and agility for enterprises of all sizes. Development teams are getting new products and applications to production faster than ever before, accelerating digital transformation within their organizations. But cloud adoption hasn’t been without its speed bumps, not the least of which is security. Cloud vendors have dedicated enormous security resources to their platforms, yet barely a day goes by without news of another cloud security incident. Most of these incidents can be traced back to insecure use of cloud services rather than to security flaws in the services themselves.

To take a look at the current state of public cloud security, the Zscaler ThreatLabZ team collected anonymous statistics from customers running hundreds of thousands of workloads in AWS, Azure, and Google Cloud Platform (GCP). We also sampled user and application settings from customers using Microsoft 365 (M365).

In this post, we’ll talk about the findings at a high level. Future posts will dive deeper into cloud-based attacks observed by the ThreatLabZ team, the risk of certain types of cloud misconfigurations, and the appropriate mitigations to put into place to protect against security incidents.

Cloud security shared responsibility model

Cloud security and compliance is a shared responsibility between the cloud service provider (CSP) and the customer. All the CSPs advertise this model clearly: the CSP provides the security “of” the cloud service, and the customer is responsible for the security “in” the cloud service.

The split of responsibilities varies based on the type of cloud service being used. In a SaaS application, such as M365 or Salesforce, the cloud vendor is responsible for the entirety of the application’s security, from the physical security through the operating system(s) and the application itself.

In an IaaS platform deployment, however, the customer is responsible for quite a bit more of the security and configuration of the services. Often, this includes the application code and even the operating system.

In all cases, it is the enterprise’s responsibility to ensure that its data is properly protected, whether it lives in an enterprise data center or in a public cloud environment.

Cloud security findings

Upon reviewing the data, we found that a broad range of widely reported security issues are still not adequately mitigated in most environments. Key areas of deficiencies include:

- Lack of logging and monitoring

If there is a compromise or other security incident, the first place to look for information on the event is log files. Even without a security failure, robust logging can help you fully understand what’s going on in your cloud environment. CSP tools, such as AWS CloudTrail and Azure Monitor, can help ensure that you have this information when needed. But they only work when enabled. Our analysis found that nearly 20 percent of implementations did not have CloudTrail enabled, and more than half did not take steps to maintain their logging beyond the default 90 days.

- Excessive permissions

Compromised credentials are to blame for the vast majority of breaches, so it’s no surprise that cloud access keys and credentials are a primary target for bad actors. Regardless of the strength of your security, an attacker with the right credentials can walk right through the front door. Notable examples include Uber, where the personally identifiable information (PII) of 57 million users was leaked when attackers nabbed hardcoded AWS credentials from a GitHub repo, and Code Spaces, whose entire company assets were wiped out from AWS after a phishing incident. In our analysis, a high percentage of organizations neglected to use multifactor authentication and used hard-coded access keys that persist for far too long before they are rotated.

- Storage and encryption

Publicly exposed cloud storage buckets have been the cause of a number of high-profile data exposures over the past several years. The L.A. Times, Tesla, the Republican Party, Verizon, and Dow Jones are but a few of the well-known organizations that have made this mistake. Despite the press coverage, cloud storage remains the most common area of cloud misconfiguration. Loose access policies, lack of encryption, policies that aren’t uniformly applied, and accessibility via unencrypted protocols are but a few of the most common issues.

- Network security groups

Network security groups control the network connectivity of every service in a cloud deployment, acting like a network firewall. Unfortunately, they are the second-most widely observed area of misconfiguration after cloud storage. In some cases, the misconfigurations are the result of human error. In other cases, security groups are intentionally left open to facilitate connectivity or to avoid complexity. Externally exposed protocols such as Secure Shell (SSH) and Remote Desktop Protocol (RDP) are far too common and give attackers the ability to take over systems and move laterally within an organization’s cloud footprint.

Lack of logging and monitoring

In a typical cloud environment, gigabytes (GBs) of data are moving in and out all the time. To fully understand what’s going on in your cloud environment you’ll need a robust logging and monitoring system in place.

For example, AWS CloudTrail is a logging service that gathers information about API calls, actions, and changes within your AWS environment. CloudTrail logs contain critical information for audits and intrusion response. CloudTrail event history is enabled by default and keeps management events for 90 days. If you need more than 90 days, you’ll have to create a trail that delivers those events to an Amazon S3 bucket.

Amazon CloudWatch collects and tracks metrics, monitors log files, and can trigger automated responses to common events in your environment.

Impact

In case of a compromise, logs are often the first source of information. They are a crucial part of incident response.

Prevention

- Ensure CloudTrail/Azure Monitor is enabled (for master and provisioned accounts)

- Persist logs to S3 buckets/Azure Storage and configure lifecycle management

- Ensure S3 server-side encryption (at a minimum)
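
The three checks above can be sketched as a small audit function. This is a minimal illustration, not a complete tool: it assumes the inputs are dictionaries shaped like the responses of the AWS SDK calls `describe_trails`, `get_bucket_lifecycle_configuration`, and `get_bucket_encryption`, and the function name `audit_log_retention` and the wording of the findings are hypothetical.

```python
from typing import Dict, List, Optional


def audit_log_retention(
    trail: Optional[Dict],
    lifecycle: Optional[Dict],
    encryption: Optional[Dict],
) -> List[str]:
    """Return findings for one account's logging setup (empty list = no findings)."""
    findings = []

    # Without a trail that delivers to S3, only the default event history exists.
    if not trail or not trail.get("S3BucketName"):
        findings.append("CloudTrail logs are not persisted to an S3 bucket")
        return findings

    # A lifecycle configuration counts only if at least one rule is enabled.
    rules = (lifecycle or {}).get("Rules", [])
    if not any(rule.get("Status") == "Enabled" for rule in rules):
        findings.append("log bucket has no enabled lifecycle rule")

    # Default server-side encryption is reported as a list of rules.
    sse_rules = (
        (encryption or {})
        .get("ServerSideEncryptionConfiguration", {})
        .get("Rules", [])
    )
    if not sse_rules:
        findings.append("log bucket has no default server-side encryption")

    return findings
```

Passing `None` for a missing trail, lifecycle configuration, or encryption configuration yields the corresponding finding, so the same function can be run across many accounts and the results aggregated.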

Statistics

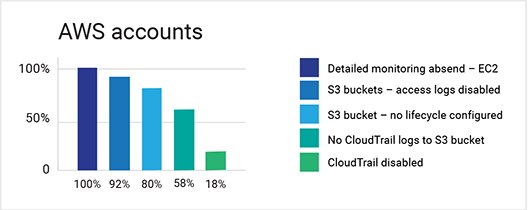

- Access logs were not enabled for 92 percent of S3 buckets

- 99 percent did not require server-side and in-transit encryption

- 18 percent had CloudTrail disabled

- 58 percent did not persist CloudTrail logs to S3

- 78 percent of S3 buckets did not have a lifecycle configuration

- 100 percent of EC2 instances did not have detailed monitoring enabled

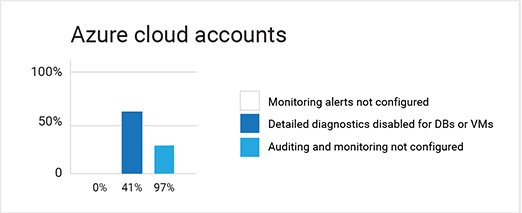

- No accounts had Azure Monitor alerts configured

- Detailed diagnostics were not enabled for 89 percent of SQL databases or VMs on Azure

Excessive permissions

Impact

Access keys and credentials are usually the first target for adversaries. With these in hand, it doesn’t matter which security policies or firewall rules are in place — the attacker has access to the entire cloud account.

Examples

- Code Spaces was compromised in 2014 when its console credentials were phished. The adversaries wiped most of the company’s assets on AWS. [1]

- PII of 57 million users was leaked from Uber in 2016 when attackers got access to hardcoded AWS credentials from a GitHub repository. [2]

- Records belonging to 35 million customers of Malindo Air were leaked by former employees of a vendor who abused their access. [3]

Prevention

Multifactor Authentication (MFA)

- Passwords are still the predominant method for authenticating to computing systems. Each system should have its own unique, hard-to-guess password, but it is impractical for users to generate and memorize such passwords for the hundreds of sites they use. That makes a second factor of authentication all the more important.

- In our analysis of customer environments, we identified that a vast majority of the customers did not make use of either hardware or software based MFA.
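
One way to find console users without MFA is to scan the IAM credential report, which AWS exports as CSV. The sketch below assumes the report text has already been downloaded and relies on its `user`, `password_enabled`, and `mfa_active` columns; the function name `console_users_without_mfa` is hypothetical.

```python
import csv
import io
from typing import List


def console_users_without_mfa(report_csv: str) -> List[str]:
    """List users who have a console password but no active MFA device."""
    reader = csv.DictReader(io.StringIO(report_csv))
    return [
        row["user"]
        for row in reader
        # Users without a console password (for example, service users)
        # are skipped, because MFA protects interactive sign-in.
        if row.get("password_enabled") == "true"
        and row.get("mfa_active") != "true"
    ]
```

A user with `password_enabled` set to `true` and `mfa_active` set to `false` would be returned; users that only have access keys are not covered by this check.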

Key rotation

- Access keys in practice are the same as usernames and passwords, but used programmatically.

- Users do not treat these with the same precautions as for passwords. They end up hard-coded in code, saved in plain text, and more.

- To limit the exposure of keys, it is necessary to rotate them periodically.

- Also, unused keys must be revoked.
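
Checking key age is straightforward to automate. The following is a minimal sketch that assumes each key is a dictionary shaped like an `AccessKeyMetadata` entry from the IAM `list_access_keys` response, with a timezone-aware `CreateDate`. The 90-day window is an assumed policy value, not a figure from this analysis, and `keys_needing_rotation` is a hypothetical name.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

MAX_KEY_AGE_DAYS = 90  # assumed rotation window; adjust to your own policy


def keys_needing_rotation(
    keys: List[Dict],
    now: Optional[datetime] = None,
    max_age_days: int = MAX_KEY_AGE_DAYS,
) -> List[str]:
    """Return the IDs of active access keys older than max_age_days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in keys
        # Inactive keys cannot be used, so only active keys are reported.
        if key.get("Status") == "Active" and key["CreateDate"] < cutoff
    ]
```

The `now` parameter exists so the check can be run against a fixed date, which makes the result reproducible in reports and tests.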

Principle of least privilege

- Do not use the “root” user. Create users with the specific privileges they’d need.

- Assign policies to groups, not users, to ensure consistency.

- Use roles (IAM roles, Azure RBAC) instead of long-term access keys.
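
To illustrate what a least-privilege review might look for, the sketch below flags IAM policy statements that allow wildcard actions on all resources. It assumes the policy is a parsed IAM policy document, in which `Statement`, `Action`, and `Resource` may each be a single value or a list. The helper names are hypothetical, and the check is deliberately simple: it does not evaluate conditions or `NotAction`.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[Any]:
    """Normalize a policy field that may be missing, a single value, or a list."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy: Dict) -> List[Dict]:
    """Return Allow statements that grant wildcard actions on every resource."""
    broad = []
    for statement in _as_list(policy.get("Statement")):
        if statement.get("Effect") != "Allow":
            continue
        actions = _as_list(statement.get("Action"))
        resources = _as_list(statement.get("Resource"))
        # "*" grants everything; "service:*" grants everything in one service.
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        if wildcard_action and "*" in resources:
            broad.append(statement)
    return broad
```

A statement such as `{"Effect": "Allow", "Action": "*", "Resource": "*"}` would be flagged, while one scoped to a specific action and resource ARN would not.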

Statistics

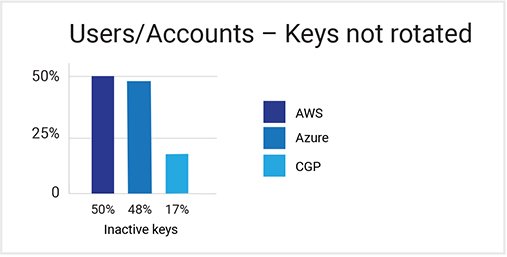

- Access keys were not rotated periodically in 50 percent of environments, resulting in exposed keys being usable for long periods of time.

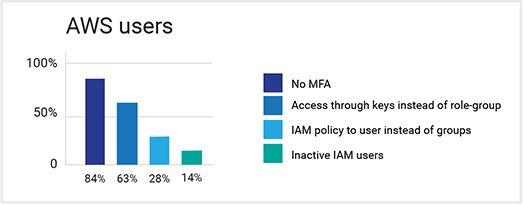

- 63 percent of AWS console IAM users didn’t use MFA.

- In AWS accounts, 28 percent of access was through keys instead of roles or groups. (Roles ensure uniformity in access and support the principle of least privilege.)

- 84 percent assigned IAM policies to users instead of groups.

- 14 percent of IAM users were inactive.

Storage and encryption

What

The most common misconfigurations still revolve around cloud storage buckets and the objects within, which pose a big confidentiality risk and make them the number-one target for data breaches. These misconfigurations encompass several commonly observed mistakes while initializing and operationalizing the storage buckets and the contents within them, such as:

- Loose storage bucket access policies

- Access policies not applied uniformly to all users

- Contents within the storage bucket not being encrypted

- Accessing contents from storage buckets over unsecured channels

- Backup storage and objects within them not being encrypted

Impact

These misconfigurations can lead to unauthorized users getting access to the storage buckets with the potential to:

- Download and expose proprietary data or sensitive data that are otherwise meant to be kept confidential

- Upload malicious programs/files including malware/ransomware

- Modify existing contents

- Destroy backup storage buckets

Examples

- In 2018, the misconfigured storage bucket of L.A. Times was open to the internet, which eventually led to a massive cryptojacking attack.

- Around the same time, Tesla’s cloud account was breached by hackers who used the account for malicious activities such as cryptomining. The hackers were able to break in because a Kubernetes administration console wasn’t password-protected and exposed the credentials for Tesla’s cloud account.

- Earlier this year, Twilio, the cloud communications platform-as-a-service company, reported an incident in which a misconfigured S3 bucket allowed bad actors to modify the TaskRouter JavaScript SDK.

Prevention

Apply a stringent, uniform access policy

- The access policies applied to the storage buckets and the contents within them need to be stringent and uniform across all users. This may not completely eliminate instances of cloud storage being exposed to the internet, but it will certainly minimize them.

- Instead of tying the access policies to a user, a role-based access policy will enforce uniform access policies across the users.

Encrypt the contents within storage buckets

- Encrypt the contents within storage buckets using the strongest ciphers so that in case of a data breach, it will be difficult for attackers to get the actual contents. This will help organizations minimize the damage if an incident occurs.

Access contents from the storage buckets over encrypted channels

- Access the storage buckets and the contents of the storage over a secured channel by enabling SSL/TLS protocols rather than using a plain HTTP protocol.
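
For S3, a common way to enforce encrypted access is a bucket policy that denies any request made without TLS, using the `aws:SecureTransport` condition key. The sketch below only builds the policy document; the function name and the `Sid` value are hypothetical, and applying the policy to a bucket is left out.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> str:
    """Build a bucket policy (as JSON) that rejects requests not sent over TLS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",  # illustrative identifier
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # The condition is true only for plain HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }
    return json.dumps(policy, indent=2)
```

Because the statement is an explicit deny, it takes precedence over any allow statements, so plain HTTP access is refused even for otherwise authorized users.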

Secure backup storage buckets and the contents within them

- It is important to apply strict, uniform access policies and encryption to the backup storage and the backed-up data within it.

Frequent audits of access policy and automation

- It is recommended that organizations have a stringent audit process and perform frequent audits of storage bucket configuration settings and access policies.

- Sophisticated automation is essential for applying the best security practices uniformly across all users and to quickly detect any misconfigurations.

Robust alerting

- It is very important to have a robust alerting mechanism in place to promptly notify cloud admins and users about misconfigurations.

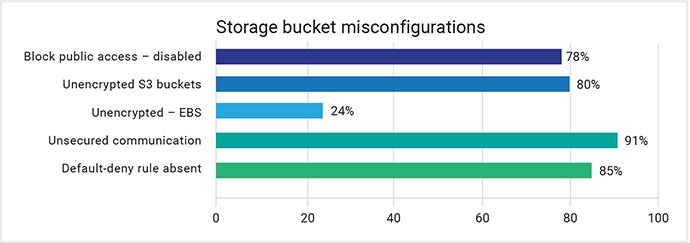

Statistics

From the internal statistics collected by its CSPM organization, Zscaler observed that:

- About 78 percent of user accounts had the “Block Public Access” option disabled, which poses a much bigger risk of the storage buckets owned by these users getting exposed to the internet.

- Approximately 80 percent of accounts didn’t have disk encryption enabled and approximately 24 percent didn’t have encrypted Elastic Block Store (EBS) volumes.

- Surprisingly, 91 percent of storage accounts were using non-secured communication channels while accessing data. Most of these unsecured channels were observed when other modules accessed the contents of the buckets; for content access from the internet, most of the accounts had the SSL/TLS option enabled.

- Some accounts still used HTTP instead of HTTPS while accessing objects remotely from the internet, which is more than enough for attackers to get access to the storage buckets and abuse them for malicious activities.

- Most Azure accounts had the storage buckets encrypted. This is probably the result of Azure’s default option of enforcing encryption on storage buckets. A small step like this can help to ensure uniform enforcement of security policies.

- About 85 percent of Azure accounts didn’t have a default network access rule set to deny. This can possibly expose the network to the internet and bad actors.

Network security groups

A network security group is like a network firewall to protect cloud workloads from the internet. The network security group controls the traffic coming in and going out to the cloud-based servers/systems based on the rules enforced.

Misconfigurations in network security groups are the second most widely observed misconfigurations after misconfigurations in storage buckets.

These misconfigurations can be the result of unintentional human error. At times, IT admins and/or users open up the rules in the network security group for specific purposes, such as debugging or allowing legitimate remote network operations. But, when finished, they sometimes forget to revert to the more stringent rules, leaving openings that hackers can leverage to penetrate the cloud-based systems.

Cloud users often have a tendency to enforce the default policy, which is sometimes insufficient for adequate security.

Impact

- Improper rules configured to protect cloud-based systems can allow bad actors to probe into the network and identify the servers and services running on them that are open to the internet by performing reconnaissance attacks.

- Attackers can go beyond reconnaissance and conduct denial-of-service (DoS) or distributed denial-of-service (DDoS) attacks by continuously sending large numbers of ICMP packets (known as an ICMP flood or ping flood) to a cloud-based server, exhausting server resources and/or choking the internet pipe.

- Misconfigured network security groups allow attackers to abuse the exposed services and ports to make their way into the cloud-based systems through a brute-force attack or by exploiting known vulnerabilities. By opening up multiple concurrent connections, attackers can also conduct DoS attacks and bring systems down.

- Once bad actors get in, they can perform any illegitimate activities, such as stealing or making sensitive data publicly available, implanting malware or ransomware, and moving laterally to other systems.

Examples

- Last year, a sophisticated P2P botnet, named FritzFrog, was discovered to have been actively abusing the SSH service for many months and was believed to have infected hundreds of servers.

- Earlier this year, Sophos identified a Cloud Snooper attack, which bypassed all security measures. According to the results of their investigation, the attacker is believed to have gotten in by brute-forcing an SSH service that was open to the internet.

- Also this year, Kaiji, new Chinese Linux malware targeting IoT devices and servers, is believed to be using a similar SSH brute-force technique to penetrate systems and spread itself.

Prevention

Zscaler highly recommends implementing a zero trust network access (ZTNA) architecture to safeguard all your applications and only allow authorized users to access these applications. If done right using solutions like Zscaler Private Access, you can completely eliminate the external attack surface by blocking all inbound communication and preventing lateral propagation from an infected system. For organizations that are still in the process of implementing ZTNA, here are some short-term best practices when creating network security group/policy rules and applying them to cloud resources to minimize the risk of becoming easy targets for the attackers.

Block inbound traffic to certain services and database servers from the internet

- It is critical to block incoming traffic to services such as SSH and RDP by blocking inbound sessions from the internet to TCP port 22 and TCP port 3389, respectively. Exposing database services to the internet can have dangerous repercussions, so incoming traffic from the internet to database services must be blocked.

- If these services are running on other non-standard ports, block those ports explicitly. It is false to assume that they are now hidden from attackers because the services are running on non-standard ports. It is not that difficult for hackers to find these services even if they may be running on non-standard ports.

- If it is necessary to open up those services for legitimate network operations or remote debugging, access must be restricted to a specific set of IP addresses rather than allowed from anywhere on the internet.
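
A check for internet-exposed SSH and RDP can be sketched as follows. The function assumes its input is a list of rules shaped like the `IpPermissions` field of the EC2 `describe_security_groups` response; the names `exposed_admin_ports`, `SENSITIVE_PORTS`, and `OPEN_CIDRS` are hypothetical. It only looks at the standard ports, so services moved to non-standard ports need their own entries.

```python
from typing import Dict, List

SENSITIVE_PORTS = {22: "SSH", 3389: "RDP"}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}  # "anywhere" for IPv4 and IPv6


def exposed_admin_ports(ip_permissions: List[Dict]) -> List[str]:
    """Return the names of admin services reachable from the whole internet."""
    exposed = set()
    for rule in ip_permissions:
        cidrs = {r.get("CidrIp") for r in rule.get("IpRanges", [])}
        cidrs |= {r.get("CidrIpv6") for r in rule.get("Ipv6Ranges", [])}
        if not cidrs & OPEN_CIDRS:
            continue  # the rule is restricted to specific addresses

        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            # "-1" means all protocols and all ports.
            exposed.update(SENSITIVE_PORTS.values())
            continue
        if protocol != "tcp":
            continue

        low, high = rule.get("FromPort"), rule.get("ToPort")
        if low is None or high is None:
            continue
        for port, name in SENSITIVE_PORTS.items():
            if low <= port <= high:
                exposed.add(name)
    return sorted(exposed)
```

Port ranges are checked rather than exact matches, so a rule that opens ports 0 through 65535 to the internet is reported for both services.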

Restrict outbound traffic

- Don’t ignore the outbound filters/rules; make them as stringent as possible. It is strongly advised to restrict outbound server traffic to only those ports and IP addresses that the services need to reach for legitimate operations. These restrictions will help to reduce the lateral spread of infection or data exfiltration in case a system is compromised, thereby minimizing the damage.

Block reconnaissance attempts

- Since ICMP ping is a very handy tool to test network connectivity, it is often used to discover systems. Block inbound and outbound ICMP traffic to make it harder for bad actors to know where the servers are.

- Block port scanning and IP scanning attempts. The scanning is mostly done in the initial phase, where attackers try to identify the systems and services that they can target.

Network segmentation

- Network segmentation designed with security in mind is absolutely critical because it is instrumental in limiting data breaches and reducing damages.

- Deploy network detection and response (NDR) to monitor traffic in real time and to identify and mitigate threats quickly.

Apply security patches promptly and always run the latest versions

- It is imperative to apply the latest available security patches for the applications and services running on cloud-based systems.

- Running older versions of software makes systems more vulnerable to exploitation and can eventually lead to a severe incident.

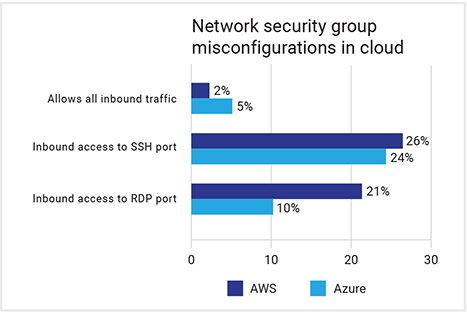

Statistics

- Though the percentage of resources that were completely open to the internet was as low as 5 percent, this is still too high. Attackers can, and do, leverage these misconfigurations to get full access to an organization’s cloud environment.

- Zscaler found 26 percent of servers still exposing their SSH ports out to the internet and about 20 percent of servers with RDP exposed. These numbers remain very high and heighten the risk of cloud-based resources becoming compromised.

Conclusion

The use of public clouds is growing and so are the attacks targeting them. But that doesn’t mean public clouds are risky and organizations should stay away from them. The ThreatLabZ research showed that most successful cyberattacks on public cloud instances are caused by security misconfigurations rather than by vulnerabilities in the underlying infrastructure. Organizations can take simple steps to ensure that their configurations are secure and their data is protected.