By now, you are likely familiar with ChatGPT, given how quickly the tool has grown. Just two months after its release, it had become one of the fastest-growing consumer applications the world had seen.

The attention ChatGPT has attracted has led organizations to reach different conclusions about the use of large language models in advanced chatbots, from the everyday use of the technology at work to the larger societal questions it raises.

In a recent post, we explored how to both limit the use of AI tools and safely adopt them: “Make generative AI tools like ChatGPT safe and secure with Zscaler.”

Many organizations are keen to harness these tools to boost productivity. ChatGPT has also drawn the attention of regulators, given how it collects and produces data. While data compliance questions are still being actively debated, one thing is not up for debate: corporate intellectual property and sensitive customer data must be protected from being used in ChatGPT or other AI projects.

As we mentioned in our previous post, we have identified hundreds of AI tools and sites, including OpenAI ChatGPT, and created a URL category called ‘AI and ML Applications.’ Organizations can use this URL category to take one of several actions: block access to these sites outright; allow caution-based access, in which users are coached on the use of these tools; or allow use with guardrails through browser isolation to protect data.
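To make these options concrete, here is a minimal, hypothetical sketch of how a policy might map a URL category to one of those actions. Only the category name comes from this post; the `Action` enum, the `POLICY` table, and `decide()` are illustrative assumptions, not Zscaler's configuration model or API.

```python
from enum import Enum


class Action(Enum):
    """Hypothetical policy actions mirroring the options described above."""
    BLOCK = "block"      # deny access outright
    CAUTION = "caution"  # show a coaching page, then let the user proceed
    ISOLATE = "isolate"  # render the site in browser isolation
    ALLOW = "allow"      # default for categories with no special rule


# Hypothetical per-organization policy: URL category -> action.
POLICY = {
    "AI and ML Applications": Action.ISOLATE,
}


def decide(url_category: str, policy: dict[str, Action] = POLICY) -> Action:
    """Return the action for a request, falling back to ALLOW for unlisted categories."""
    return policy.get(url_category, Action.ALLOW)
```

With this table, `decide("AI and ML Applications")` returns `Action.ISOLATE`; an organization that is not yet ready to allow AI tools would simply map that category to `Action.BLOCK` instead.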

In this post, we look more closely at how isolation can thread the needle between boosting productivity and maintaining data security.

First, it is important to understand why data security matters so much. The Economist Korea reported a cautionary tale about ChatGPT and data security. In April, Samsung's semiconductor division experienced three separate leaks of sensitive company data through ChatGPT. In one instance, an employee used the tool to check confidential source code; in another, an employee requested “code optimization”; and in a third, an employee uploaded a meeting recording to generate a transcript. That information is now part of ChatGPT. Samsung, for its part, has limited use of the tool and may ban it or even build an internal version.

“Can I allow my users to play and experiment with ChatGPT without risking sensitive data loss?”

At Zscaler, we hear from organizations on both sides of the ChatGPT coin, which is why we offer several ways to control access to these sites. For organizations that want to allow some use, isolation can help in the following ways.

Balancing data security with innovation

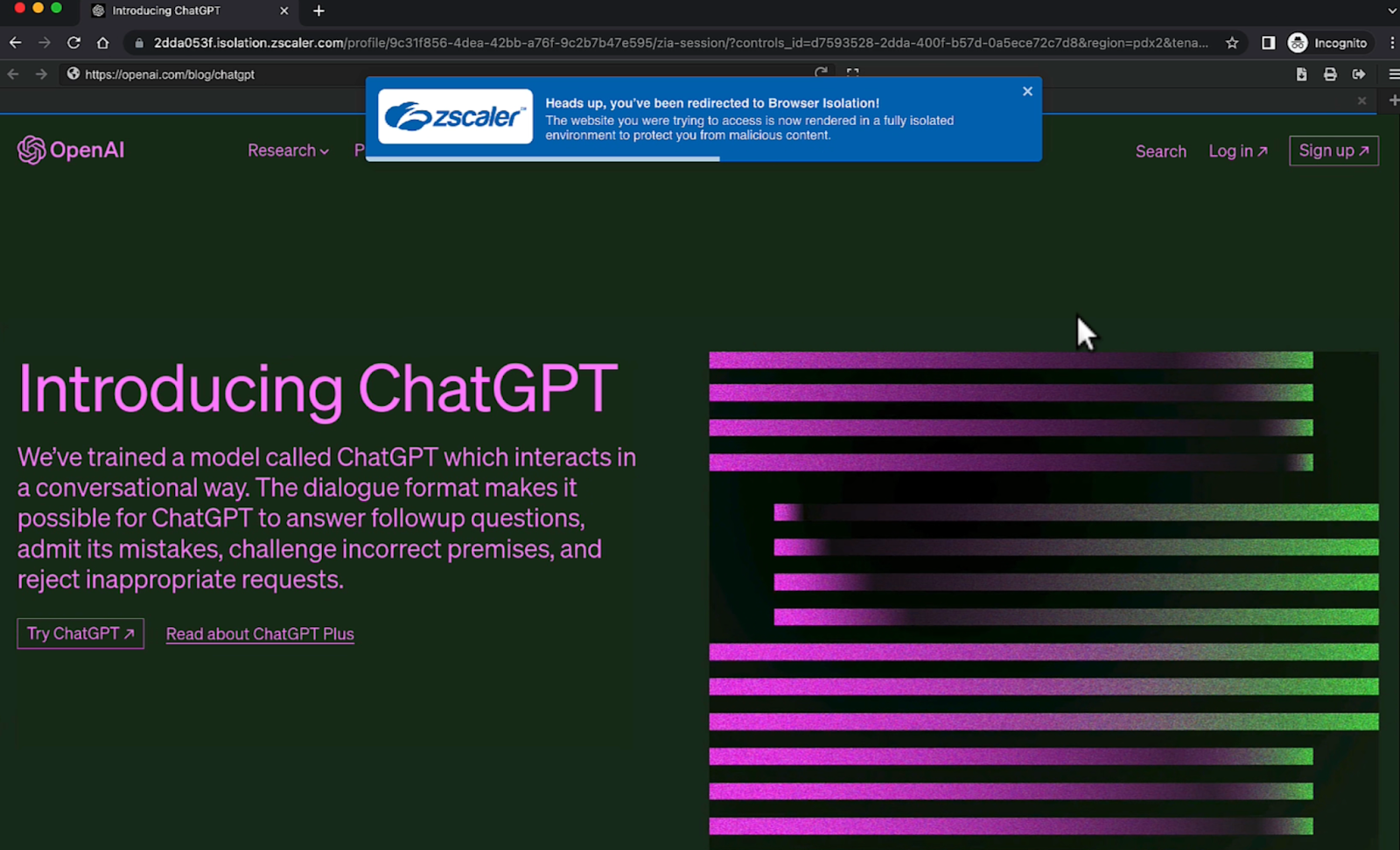

With the Zscaler Zero Trust Exchange, large language model applications and other AI sites in the ‘AI and ML Applications’ URL category can be rendered in Zscaler Browser Isolation. The benefit? Powerful controls over how sensitive data is handled, covering both files and specific pieces of sensitive information.

To begin with, you could block all uploads and downloads (on AI sites that support them) and restrict clipboard access outright, while still allowing text input so that users can make some use of the tools. This way, users can type in queries and experiment with the generated responses.
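The controls described above can be thought of as a small isolation profile that is checked for each user action. The sketch below is purely illustrative: the `IsolationProfile` fields, the action names, and `is_permitted()` are assumptions, not actual Zscaler Browser Isolation settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IsolationProfile:
    """Hypothetical per-session controls for an isolated AI site."""
    allow_upload: bool = False
    allow_download: bool = False
    allow_clipboard: bool = False
    allow_text_input: bool = True


def is_permitted(profile: IsolationProfile, user_action: str) -> bool:
    """Check whether a user action is allowed inside the isolation session."""
    rules = {
        "upload": profile.allow_upload,
        "download": profile.allow_download,
        "copy": profile.allow_clipboard,
        "paste": profile.allow_clipboard,
        "type": profile.allow_text_input,
    }
    # Actions the profile does not know about are denied by default.
    return rules.get(user_action, False)


# The locked-down starting point described above: typing only.
locked_down = IsolationProfile()
```

With the default `locked_down` profile, `is_permitted(locked_down, "type")` is `True`, while uploads, downloads, copy, and paste are all refused. Denying unknown actions by default keeps the profile safe if new browser capabilities appear later.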

What about the risk of users sharing sensitive data in their chat queries? With Browser Isolation, the Zscaler DLP engine can detect sensitive data typed into these sites and prevent it from being sent. In addition, for full visibility into how employees are using these sites, we can detect and log the queries employees pose to a site like ChatGPT, even if DLP rules are not activated.

Figure 1. DLP workflow to protect data sharing in browser isolation

Let’s say, for instance, that a user tries to submit a large amount of text, including sensitive data, in a prompt. Browser Isolation runs as an ephemeral container, so the user can still enter data, but no sensitive data is allowed to leave the company: it is blocked within the browser isolation session.
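As a rough illustration of this workflow, the following sketch scans a prompt for two kinds of sensitive data, logs every query for audit regardless of the outcome, and blocks submission if anything matches. It is a simplified stand-in, not the Zscaler DLP engine: the two patterns, `inspect_prompt()`, and the in-memory `AUDIT_LOG` are hypothetical, and real DLP dictionaries cover far more data types. The Luhn checksum is a standard way to reduce false positives on card-like numbers.

```python
import re
from datetime import datetime, timezone

# Hypothetical DLP patterns; real dictionaries cover many more data types.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,16}\b")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

AUDIT_LOG: list[dict] = []  # stand-in for an incident receiver or log sink


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-like numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list[str]:
    """Return the names of sensitive-data types detected in the text."""
    hits = []
    for m in CARD_RE.finditer(text):
        digits = re.sub(r"\D", "", m.group())
        if 13 <= len(digits) <= 16 and luhn_valid(digits):
            hits.append("payment_card")
            break
    if SSN_RE.search(text):
        hits.append("us_ssn")
    return hits


def inspect_prompt(user: str, site: str, prompt: str) -> bool:
    """Log the prompt for audit and return True if it may be submitted."""
    hits = find_sensitive(prompt)
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "site": site,
        "prompt": prompt,
        "detections": hits,
        "blocked": bool(hits),
    })
    return not hits
```

For example, `inspect_prompt("alice", "chat.openai.com", "Refund card 4111 1111 1111 1111")` returns `False` and records a `payment_card` detection, while an ordinary question is allowed but still logged. Logging before deciding mirrors the point above that queries can be recorded even when no DLP rule fires.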

What about intellectual property concerns, such as ensuring that no data can be downloaded onto a user’s computer? Downloads can be blocked outright in isolation, or files can be saved to temporary “protected storage” so that no such data reaches the actual endpoint or violates any endpoint upload/download policy. Similarly, output productivity files such as .docx and .xlsx can be viewed as read-only PDFs within that protected storage without being downloaded to the endpoint itself.

Moreover, even if uploads are allowed, whether to AI tools or any other site, the DLP engine can detect sensitive content in the uploaded files and can even use optical character recognition (OCR) to find sensitive data in images.

Finally, organizations will want a full record of how employees use these sites in isolation. This is where the DLP Incident Receiver comes into play: it captures and logs requests to AI applications for review by security or audit teams.

But what about organizations that are not yet ready to allow the use of AI applications? As we outlined in our previous post, they can simply block access to the ‘AI and ML Applications’ URL category.

In short, the Zscaler Zero Trust Exchange protects your sensitive data while keeping your users productive and preventing shadow IT usage.

See our protections in action in this short demo:

To learn more about empowering teams to use ChatGPT safely, contact your account team, sign up for a demo of Zscaler for Users, or read our other blog post on the topic.