In the last few weeks, I’ve spoken with more than 50 CISOs and security practitioners at the RSA conference and elsewhere on the US East Coast. ChatGPT and other generative AI tools were top of mind for many executives across financial services, manufacturing, IT services, and other verticals. Here are the top questions I received:

- What controls does Zscaler provide to block ChatGPT?

- How can I safely enable ChatGPT for my employees instead of outright blocking it?

- What are other organizations doing to control how their employees use ChatGPT and generative AI?

- How do I prevent data leaks on ChatGPT and avoid the situation Samsung recently found itself in?

- What is Zscaler doing to harness the positive use cases of AI/ML tools such as ChatGPT?

ChatGPT usage has gained momentum in the consumer and enterprise space during the last six months, but other AI tools, like Copymatic, AI21, etc., are also on the uptick. Many of our larger enterprise customers, especially in the European Union and those in the financial industry worldwide, have been working on formulating a policy framework to adopt such tools safely.

Strike a balance with intelligent access controls

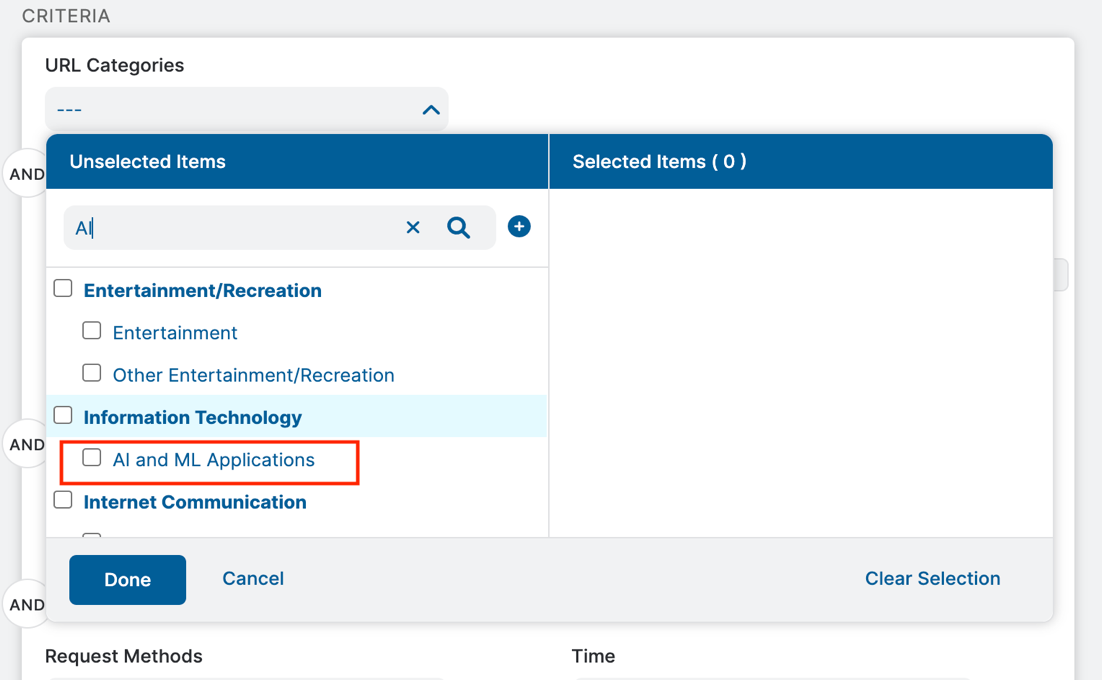

Zscaler has identified hundreds of such tools and sites, including OpenAI ChatGPT, and we have created a URL category called ‘AI and ML Applications’ through which our customers can take the following actions on a wide variety of generative AI and ML tools:

- Block access (a popular control in financial services and other regulated industries)

- Caution-based access (coach users on the risks of using such generative tools)

- Isolate access (access is granted through browser isolation only, and output from such tools cannot be downloaded, to prevent IP rights and copyright issues)
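To make the three options concrete, here is a minimal sketch of how a category-based access decision could be modeled. It is an illustration only, not Zscaler's implementation: the `Action` enum, the `AI_ML_HOSTS` set, the `genai.example.com` hostname, and the `decide` function are all hypothetical names chosen for this example.

```python
from enum import Enum
from urllib.parse import urlparse


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    CAUTION = "caution"   # show a coaching page before continuing
    ISOLATE = "isolate"   # render in browser isolation, no downloads


# Hypothetical membership of the 'AI and ML Applications' category.
AI_ML_HOSTS = {"chat.openai.com", "genai.example.com"}

# Hypothetical policy: one action per URL category.
CATEGORY_POLICY = {"AI and ML Applications": Action.CAUTION}


def categorize(url):
    """Return the category name for a URL, or None if uncategorized."""
    host = (urlparse(url).hostname or "").lower()
    return "AI and ML Applications" if host in AI_ML_HOSTS else None


def decide(url, policy=CATEGORY_POLICY):
    """Look up the configured action; uncategorized traffic is allowed."""
    return policy.get(categorize(url), Action.ALLOW)
```

With this sketch, switching an organization from coaching to blocking is a one-line change to the policy table, which is the appeal of acting on a category rather than on individual sites.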

Fine-grained DLP controls on ChatGPT

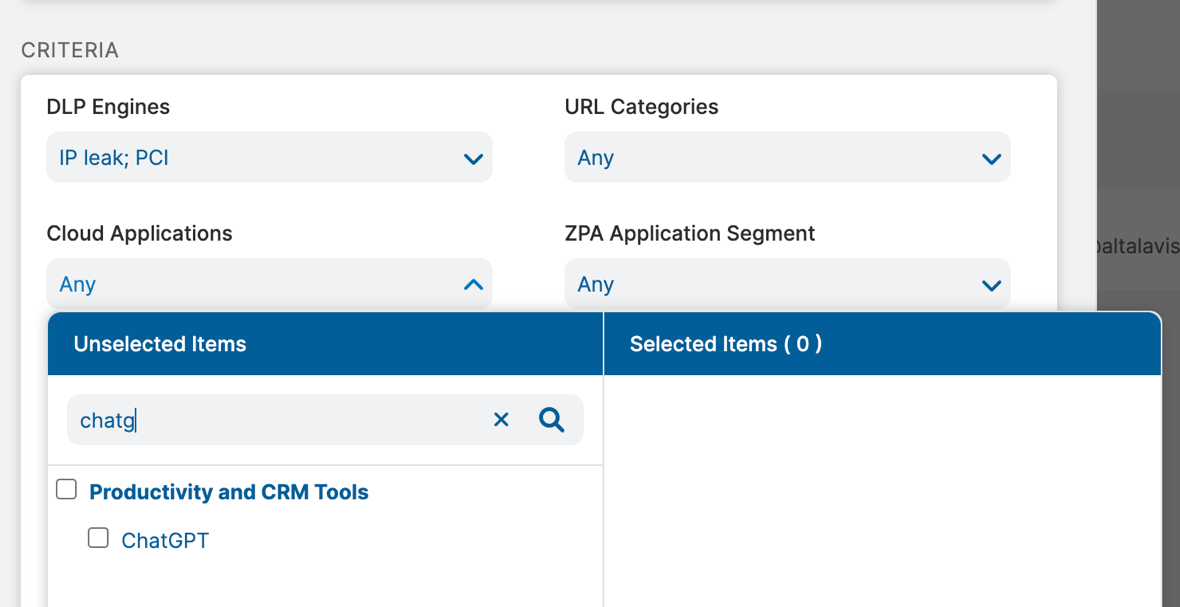

Since ChatGPT is the most popular AI application, we created a pre-defined ChatGPT Cloud Application to provide more preventive controls around it. Organizations that do not want to block access to ChatGPT, but are worried about data leakage and about giving up IP rights on content uploaded to ChatGPT, can enable fine-grained DLP controls as well.

Customers can also set up stringent data protection policies using Zscaler DLP policies. Since ChatGPT is served over HTTPS, Zscaler’s inline SSL decryption provides complete visibility into the queries and content that users post to the ChatGPT site, as well as the content they download.

This short demo video shows how Zscaler DLP blocks users from uploading credit card numbers to the ChatGPT site. Zscaler DLP applies policy inline to prevent data loss, and the same approach applies to source code through Zscaler Source Code dictionaries.
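For readers who want a feel for what inline inspection of a prompt involves, the sketch below shows one common way to spot credit card numbers in text: find digit runs of plausible length, then confirm them with the Luhn checksum. This is a generic technique shown under my own assumptions, not a description of how Zscaler DLP dictionaries work internally; the function names and the `"block"`/`"allow"` return values are hypothetical.

```python
import re

# Candidate runs of 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits):
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def contains_card_number(text):
    """Return True if the text holds a Luhn-valid card-like number."""
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return True
    return False


def inspect_post(body):
    """Hypothetical inline verdict for the body of an outbound POST."""
    return "block" if contains_card_number(body) else "allow"
```

The checksum step matters in practice: without it, order IDs and phone numbers pasted into a prompt would trigger far more false positives.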

Insight into ChatGPT usage and queries

Additionally, many customers asked whether they could view the queries their employees post to ChatGPT, whether or not a DLP rule triggers on them. We absolutely allow for this with the Incident Receiver integrated with Zscaler’s DLP policies.

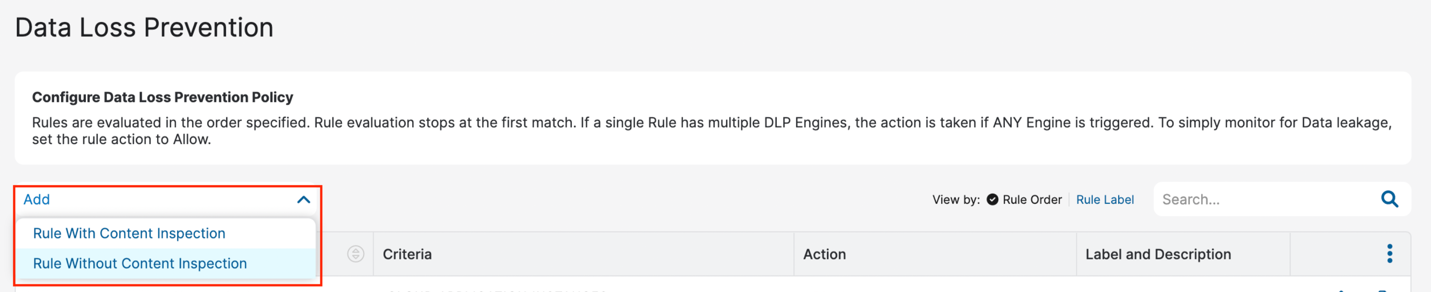

This short demo video illustrates how to configure a “DLP rule without content inspection” in the data protection policy. That means you can capture any HTTP POST request larger than 0 bytes made to the ChatGPT application and send it to the Zscaler Incident Receiver, which in turn can forward the captured POST to the enterprise incident management tool or an auditor’s mailbox.
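The logic of such a rule is simple enough to sketch in a few lines. The example below is a conceptual model only, assuming a plain Python list as a stand-in for the incident receiver; `Incident`, `capture_post`, and `watched_hosts` are hypothetical names, and nothing here reflects the actual Zscaler configuration or API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Set


@dataclass
class Incident:
    user: str
    host: str
    body: str
    captured_at: str


def capture_post(method: str, host: str, body: bytes, user: str,
                 watched_hosts: Set[str], receiver: List[Incident]) -> bool:
    """Record any non-empty POST to a watched host, with no content inspection.

    Returns True if the request was captured.
    """
    if method.upper() != "POST" or host.lower() not in watched_hosts:
        return False
    if len(body) == 0:          # the rule only matches bodies > 0 bytes
        return False
    receiver.append(Incident(
        user=user,
        host=host.lower(),
        body=body.decode("utf-8", errors="replace"),
        captured_at=datetime.now(timezone.utc).isoformat(),
    ))
    return True
```

Because the rule matches on size rather than content, every prompt is logged for audit, including those that no DLP dictionary would flag.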

This capability can give you peace of mind and allows your organization to safely enable ChatGPT for its employees.

Cyber security concerns with ChatGPT

Though ChatGPT and many tools like it have been built to prevent misuse and the creation of malicious code on their platforms, they can be tricked into generating code for ethical hacking or penetration testing, and that code can then be tweaked to create malware. Publicly known examples of such malware might not exist yet, but there is enough chatter on the dark web to warrant keeping an eye on it.

Additionally, many email security products use NLP techniques to detect phishing and social engineering attacks. ChatGPT can help attackers write emails in fluent English, free of spelling mistakes and grammatical errors, which makes such controls less effective.

Next steps

The adoption of ChatGPT and generative AI is heading to the mainstream, and it is likely that an ‘enterprise version’ will soon emerge that allows organizations to extend existing cyber and data security controls such as CASB, data-at-rest scanning, SSPM, etc.

We at Zscaler have been harnessing the power of AI/ML across the platform for the last few years to solve hard problems ranging from cyber security to AIOps. Our machine learning capabilities classify newly discovered websites, detect phishing and botnets, measure entropy in DNS traffic to detect malicious DNS activity, and even help automate root cause analysis to troubleshoot user experience issues more proactively.
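As a small illustration of the DNS entropy idea, the sketch below computes the Shannon entropy of the leftmost label of a domain name. Algorithmically generated or tunneled names tend to look more random than human-chosen ones, so a high score is one possible signal. This is a textbook calculation, not Zscaler's detection model; the `looks_suspicious` helper and its `3.5` threshold are arbitrary assumptions for the example.

```python
import math
from collections import Counter


def shannon_entropy(s):
    """Shannon entropy of a string, in bits per character."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def looks_suspicious(domain, threshold=3.5):
    """Flag a domain whose leftmost label has unusually high entropy.

    The threshold is illustrative only; a real system would tune it
    and combine entropy with other signals.
    """
    label = domain.split(".")[0]
    return shannon_entropy(label) > threshold
```

A label such as `zscaler` scores well under 3 bits per character, while a 16-character label with no repeated characters scores 4 bits, which is why entropy is a cheap first-pass filter before heavier analysis.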

We recently showcased a prototype of ZChat, a digital assistant for Zscaler services. We are now looking at extending this technology to cyber security and data protection use cases, and we will announce a few fascinating generative AI innovations at our upcoming Zenith Live conference.

Stay tuned. I look forward to seeing you at the event.