What Is Generative AI in Cybersecurity?

Generative AI in cybersecurity is a powerful tool for both defenders and attackers. GenAI, including the large language models (LLMs) behind tools like ChatGPT, can help security teams improve policy, threat detection, vulnerability management, and overall security posture. However, it can also help threat actors launch faster, more dangerous attacks.

Understanding Generative AI

Generative artificial intelligence (GenAI) influences the cybersecurity landscape in many important ways. Before we dive into that, however, we need to cover what it is and how it's used. At its core, generative AI is a type of machine learning technology that can produce natural-language text, images, and, in some cases, video, sometimes with only minimal human input.

For most GenAI use cases, a human user must prompt the AI model to create the content in question, though some advanced enterprise technologies are exceptions. For example, a person could type “Write a story about a GenAI cyberattack” into a chatbot such as ChatGPT, and the LLM will quickly produce such a story. The same goes for images: tell an AI image generator to “create a picture of a futuristic data center,” and it will do just that.

GenAI enables a bevy of use cases for professionals in any industry, helping everyday users break new ground and create content more efficiently. That said, this article looks at generative AI only as it relates to cybersecurity.

How Can Generative AI Be Used in Cybersecurity?

Generative AI can be used to assist in both defensive and offensive cybersecurity efforts. Organizations can deploy GenAI platforms in cybersecurity to:

- Protect sensitive data even more effectively: Prevent data leaks while retaining AI prompts and the output of AI apps for security and audits.

- Strengthen security against emerging threats: Gain a more proactive security posture as AI helps detect and block emerging web- and file-based attacks.

- Ensure secure use of tools like ChatGPT: Get granular control over AI application usage with the ability to set different policies for different users.

- Limit risky actions in AI apps: Prevent actions that put data at risk, like uploads, downloads, and copy/paste.
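To make per-user controls over AI apps more concrete, here is a minimal sketch of how a policy engine might evaluate a request. The group names, app name, action labels, and rule format are all hypothetical and do not reflect any particular product's configuration. The two design choices worth noting are first-match evaluation and default-deny for anything not explicitly allowed.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass(frozen=True)
class Rule:
    group: str        # hypothetical user group, e.g. "engineering"
    app: str          # hypothetical AI app identifier, e.g. "chatgpt"
    action: str       # "prompt", "upload", "download", "copy_paste", or "*" for any
    verdict: Verdict


# Hypothetical policy: engineers may prompt ChatGPT but not upload files to it;
# finance users are blocked from every action in ChatGPT.
POLICY = [
    Rule("engineering", "chatgpt", "prompt", Verdict.ALLOW),
    Rule("engineering", "chatgpt", "upload", Verdict.BLOCK),
    Rule("finance", "chatgpt", "*", Verdict.BLOCK),
]


def evaluate(group: str, app: str, action: str, policy: list[Rule] = POLICY) -> Verdict:
    """Return the verdict of the first matching rule; block if nothing matches."""
    for rule in policy:
        if rule.group == group and rule.app == app and rule.action in (action, "*"):
            return rule.verdict
    return Verdict.BLOCK  # default-deny


print(evaluate("engineering", "chatgpt", "prompt"))  # Verdict.ALLOW
print(evaluate("engineering", "chatgpt", "upload"))  # Verdict.BLOCK
print(evaluate("marketing", "chatgpt", "prompt"))    # Verdict.BLOCK (no matching rule)
```

Because rules are checked in order, more specific rules should be listed before broader wildcard rules.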

What Are the Implications of AI in Cybersecurity?

ChatGPT usage increased 634% between April 2023 and January 2024, and its influence will spread into more industries, including cybersecurity. Because so much of cybersecurity revolves around content (i.e., potential cyberthreats and attacks arriving through web traffic, email, and so on), large language models are well positioned to analyze traffic and email data and help companies predict and prevent cyber events.

Having said that, security teams will need to be prepared to fight fire with fire, because threat actors and groups will also use GenAI to gain the upper hand against organizations’ cyber defenses. According to Microsoft, the AI-enabled attacks observed so far are “early-stage” and “neither particularly novel (nor) unique”. To thwart these actors, OpenAI has been shutting down accounts linked to them, but as the number of threat groups continues to grow, such accounts will become harder to track and close.

What’s more, it’s only a matter of time before governments step in and set guidelines for how organizations use GenAI. For now, much about GenAI remains unknown, but eventually a data breach or leak may cost one or more businesses millions of dollars and push governments to regulate.

4 Benefits of Generative AI in Cybersecurity

With the right approach, GenAI can provide significant benefits to an organization when it comes to cyberthreat detection and response, security automation, and more.

Improved Threat Detection and Response

Generative AI can analyze data that represents “normal” behavior to establish a baseline, then flag deviations from that baseline that may indicate threats. On top of that, AI can generate simulated malware to help analysts understand how it behaves and identify new threats.
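A baseline can be as simple as the mean and standard deviation of one metric. The sketch below uses a classical z-score test rather than a generative model, and the metric (daily upload volume for one user) and the threshold of 3 standard deviations are hypothetical choices; it illustrates only the baseline-and-deviation idea.

```python
from statistics import mean, stdev


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Summarize 'normal' behavior as the mean and sample standard deviation."""
    return mean(history), stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value whose distance from the mean exceeds `threshold` standard deviations."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu  # any change from a perfectly flat baseline is a deviation
    return abs(value - mu) / sigma > threshold


# Hypothetical data: megabytes uploaded per day by one user over two weeks
daily_upload_mb = [120, 135, 110, 128, 140, 125, 118, 131, 122, 137, 129, 115, 124, 133]
baseline = build_baseline(daily_upload_mb)

print(is_anomalous(130, baseline))   # False: within the normal range
print(is_anomalous(2400, baseline))  # True: a spike worth investigating
```

In practice, baselines are usually built per user or per device and refreshed over time so that gradual, legitimate changes in behavior are not flagged.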

Enhanced Predictive Capabilities

Generative AI can ingest large amounts of data to create a frame of reference for future security events, enabling predictive threat intelligence and even vulnerability management.

Automation of Repetitive Tasks

GenAI can predict vulnerabilities and recommend or automate patches for applications based on historical data pertaining to those applications. It can also automate incident response, reducing the need for human intervention.

Phishing Prevention

GenAI can help build models that detect and filter out phishing emails by analyzing language patterns and structure to identify messages that use deceptive or disguised wording. It can also help create models that analyze and block malicious URLs.
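Production phishing models learn their signals from large sets of labeled email, but the kinds of signals involved can be illustrated with a simple rule-based scorer. The phrase list, top-level domain list, weights, and threshold below are hypothetical choices for this sketch, not a real detection ruleset.

```python
import re
from urllib.parse import urlparse

# Hypothetical signals; a trained model would learn far richer ones from data
URGENCY_PHRASES = ["verify your account", "urgent action required",
                   "password will expire", "confirm your identity"]
SUSPICIOUS_TLDS = (".zip", ".xyz", ".top")
IPV4_HOST = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def score_email(body: str) -> int:
    """Add up weighted phishing signals found in the email text and its URLs."""
    text = body.lower()
    score = sum(2 for phrase in URGENCY_PHRASES if phrase in text)
    for url in re.findall(r"https?://\S+", body):
        parsed = urlparse(url)
        host = parsed.hostname or ""
        if IPV4_HOST.fullmatch(host):
            score += 3  # raw IP address instead of a domain name
        if host.endswith(SUSPICIOUS_TLDS):
            score += 2  # top-level domain often abused in campaigns
        if "@" in parsed.netloc:
            score += 3  # "user@host" trick that hides the real destination
    return score


def is_phishing(body: str, threshold: int = 5) -> bool:
    return score_email(body) >= threshold


phish = "Urgent action required: verify your account at http://192.168.10.5/login"
benign = "Lunch at noon? Menu: https://example.com/menu"
print(score_email(phish), is_phishing(phish))    # 7 True
print(score_email(benign), is_phishing(benign))  # 0 False
```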

Challenges and Risks

GenAI offers unprecedented potential for content creation and organizational efficiency at large, but it’s important to understand the technology’s hurdles, as well.

Abuse by Cybercriminals

GenAI tools are available to anyone, and while well-intentioned organizations will use them to increase efficiency or improve cybersecurity, threat actors will use them just as readily to cause harm. Microsoft and OpenAI have already discovered attempts by threat groups to “organize offensive cyber operations.”

Data Quality Issues

Organizations that cannot provide high-quality training data for an AI platform will get less effective results from it. What’s more, if a business trains a GenAI platform on GenAI-created data, errors and distortions can compound and the output becomes even more garbled, a recipe for disaster when it comes to cybersecurity.

Technical Limitations

Generative AI models require large amounts of data to train effectively. As such, businesses with limited access to data, or those in niche markets, may struggle to gather sufficient training datasets. On top of that, GenAI applications are resource-intensive and require ongoing maintenance, which creates further hurdles.

Best Practices

GenAI is new to everyone, so leaders must take care in how they approach its use within an organization. Here are some of the best ways to protect your employees and business when it comes to GenAI.

- Continually assess and mitigate the risks that come with AI-powered tools to protect intellectual property, personal data, and customer information.

- Ensure that the use of AI tools complies with relevant laws and ethical standards, including data protection regulations and privacy laws.

- Establish clear accountability for AI tool development and deployment, including defined roles and responsibilities for overseeing AI projects.

- Maintain transparency when using AI tools—justify their use and communicate their purpose clearly to stakeholders.

Explore the 2024 Zscaler ThreatLabz AI Security Report for more guidance on using AI safely and protecting against AI-based threats.

The Future of AI in Cybersecurity

As mentioned above, we will eventually see compliance regulations governing the use of GenAI. Even so, GenAI will keep evolving, and cybersecurity teams will be quick to take advantage of its new capabilities.

Here are some of the ways AI is projected to help security and compliance teams succeed:

- Geo-risk analysis: Companies will eventually be able to use AI to analyze geopolitical data and social media trends to identify regions where attacks are more likely to originate.

- Behavioral biometrics: Analysis of patterns in user behavior such as keystrokes and mouse movements can be used to detect anomalies that may indicate malicious or fraudulent activity.

- Content authentication: AI will be able to verify the authenticity of audio, video, or text (for example, by detecting deepfakes) to counter the spread of misinformation.

- Compliance automation: AI will be able to regularly scan systems and processes to ensure they meet all regulatory requirements, even as they evolve.
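Keystroke dynamics, one form of behavioral biometrics, compares the timing of a user's key presses against a profile recorded at enrollment. The sketch below is a deliberately simple distance check: the timestamps, the mean-absolute-difference metric, and the 30 ms tolerance are hypothetical assumptions, and real systems use richer features (such as how long each key is held) and learned models.

```python
from statistics import mean


def intervals(timestamps_ms: list[float]) -> list[float]:
    """Time between consecutive key presses (flight times), in milliseconds."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def profile_distance(enrolled: list[float], observed: list[float]) -> float:
    """Mean absolute difference between two interval vectors for the same key sequence."""
    if len(enrolled) != len(observed):
        raise ValueError("samples must cover the same key sequence")
    return mean(abs(e - o) for e, o in zip(enrolled, observed))


THRESHOLD_MS = 30  # hypothetical tolerance before a session is flagged

# Hypothetical key-press timestamps (ms) for typing the same passphrase
enrolled = intervals([0, 110, 230, 335, 460, 570])
same_user = intervals([0, 105, 240, 340, 470, 575])
other_user = intervals([0, 220, 390, 610, 760, 990])

print(profile_distance(enrolled, same_user) <= THRESHOLD_MS)   # True: consistent rhythm
print(profile_distance(enrolled, other_user) <= THRESHOLD_MS)  # False: anomaly, step up auth
```

A mismatch like this would typically trigger step-up authentication rather than an outright block, since typing rhythm also varies with fatigue, keyboards, and injuries.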

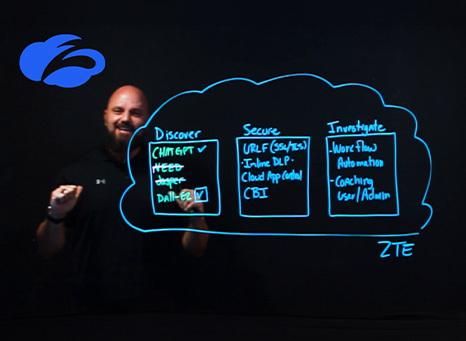

How Zscaler Secures Generative AI

The Zscaler Zero Trust Exchange™ lets you create and enforce policies around the generative AI sites your users can visit and how they can interact—directly or via browser isolation—to protect your sensitive data.

With Zscaler, your users can use generative AI safely and securely, thanks to innovations that let you:

- Understand ChatGPT usage: Keep full logs that show usage activity and prompts in ChatGPT

- Control specific AI apps: Define and enforce which AI tools your users can and cannot access

- Integrate full data security into AI usage: Ensure sensitive data never leaks through an AI prompt or query

- Restrict AI tool data uploads: Implement granular controls that allow prompts but prevent bulk uploads of important data
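Keeping sensitive data out of AI prompts is a data loss prevention (DLP) problem: the prompt is inspected before it is forwarded to the AI app. As a minimal sketch, assuming a single hypothetical detector for payment card numbers, the code below looks for digit sequences that pass the Luhn checksum and blocks the prompt if it finds one. It is an illustration only, not a description of any vendor's DLP engine, which would combine many detectors and data classifications.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens, starting and ending on a digit
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Check the Luhn checksum used by payment card numbers."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return checksum % 10 == 0


def inspect_prompt(prompt: str) -> str:
    """Return 'block' if the prompt appears to contain a card number, else 'allow'."""
    for match in CARD_CANDIDATE.finditer(prompt):
        if luhn_valid(match.group()):
            return "block"
    return "allow"


print(inspect_prompt("My card is 4111 1111 1111 1111, can you format it?"))  # block
print(inspect_prompt("Summarize the status of order 1234567890123"))         # allow
print(inspect_prompt("What is our refund policy?"))                         # allow
```

The Luhn check cuts down on false positives from order numbers and other long digit strings, which is why detectors usually pair a pattern match with a validation step.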

Request a demo to learn how Zscaler can help you control the use of generative AI without putting your sensitive data on the line.

Check out our cybersecurity predictions for 2025 to find out how GenAI could be used against you.