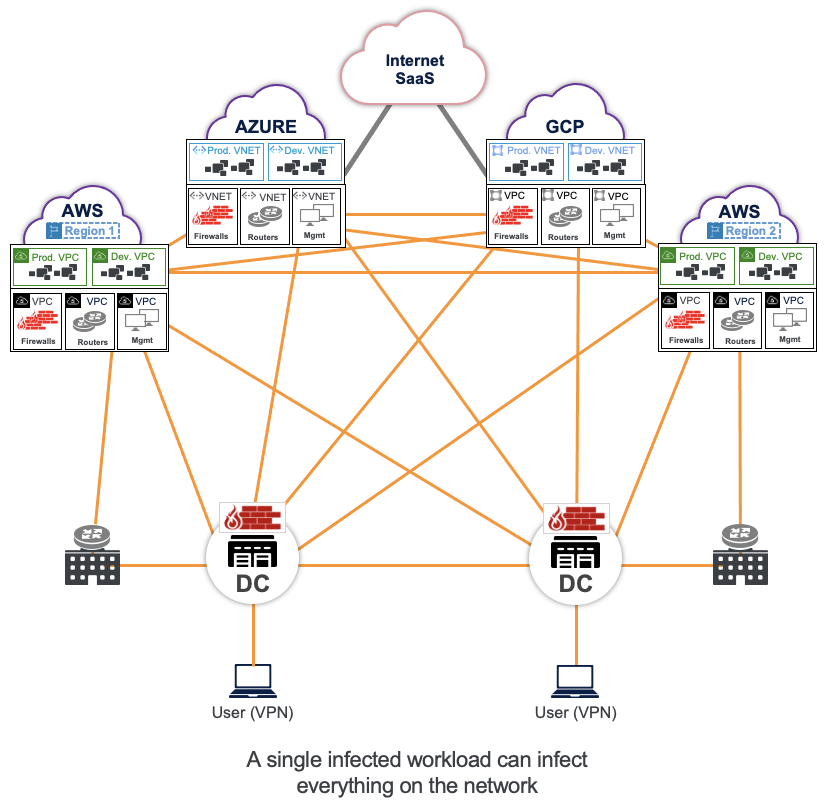

The cloud has become the new data center for most enterprises, and multi-cloud environments are increasingly the norm. Cloud workloads have to communicate securely both with each other and with the internet. Unfortunately, the existing options for building secure cloud-to-cloud and cloud-to-internet connectivity, which use firewalls and VPNs to extend the corporate WAN to the cloud, create security risks, operational complexity, and performance challenges.

Challenges with extending the corporate WAN to the cloud

Traditionally, two different environments are connected using routers. Because these routers terminate VPN or IPsec tunnels and exchange routes, each environment has access to all the IP addresses in the other. To control access between the environments, firewalls provide static access control, ensuring that only specific IPs in one environment can reach specific IPs in the other. This is the legacy IP & firewall approach that most enterprises have had no choice but to adopt.

The IP & firewall approach creates security risks because flat, full-mesh IP connectivity makes networks susceptible to the lateral movement of threats.

This approach also creates operational complexity with ephemeral cloud workloads. As IP addresses come and go, every new IP must be propagated by the routers and programmed into the firewalls, and any overlap between address ranges must be resolved before the networks can be connected.
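To make the overlap problem concrete, here is a minimal sketch of the check an operator has to run before two environments can exchange routes: does any subnet in one collide with a subnet in the other? The environment names and CIDR ranges are hypothetical; the code uses only Python's standard-library `ipaddress` module.

```python
import ipaddress
from itertools import product

# Hypothetical address plans for two environments about to be joined
# by routers in the legacy IP & firewall model.
AWS_VPC_CIDRS = ["10.0.0.0/16", "10.1.0.0/16"]
AZURE_VNET_CIDRS = ["10.1.128.0/17", "172.16.0.0/16"]


def find_overlaps(left, right):
    """Return every (left, right) pair of CIDR blocks that overlap."""
    left_nets = [ipaddress.ip_network(c) for c in left]
    right_nets = [ipaddress.ip_network(c) for c in right]
    return [
        (str(a), str(b))
        for a, b in product(left_nets, right_nets)
        if a.overlaps(b)
    ]


if __name__ == "__main__":
    for a, b in find_overlaps(AWS_VPC_CIDRS, AZURE_VNET_CIDRS):
        # Each conflict must be re-addressed or NATed before routes are exchanged.
        print(f"overlap: {a} <-> {b}")
```

Every new subnet in every cloud re-triggers this check, which is part of the operational burden described above.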

Lastly, this approach presents scale and performance challenges such as route scaling, throughput bottlenecks, and higher latency.

These challenges become increasingly daunting as more applications are distributed across multiple clouds and as enterprises adopt cloud-native services such as containers, PaaS, and serverless.

With a legacy approach, all traffic bound for the internet or for workloads in a different cloud has to go through a centralized hub that contains firewalls, Squid proxies, routers, and so on, similar to a castle-and-moat architecture in a data center. Specific limitations include:

1. Limited security posture:

A complete security posture requires additional capabilities such as proxying and data protection, because virtual firewalls cannot perform SSL inspection at scale. The result is yet more virtual appliances for Squid proxies, sandboxing, and so on.

2. Risks with full mesh networking:

To enable workload communication across a multi-cloud environment, the legacy IP architecture distributes routes and shares IP and subnet information across environments. This creates a flat network guarded by static firewall rules that are easy to evade, which can lead to lateral threat movement.

3. Performance limitation of appliances:

Throughput is limited by the weakest link: in this case, the scale and performance of the firewall. The more security services you enable on firewalls, such as SSL inspection of content, the slower the performance.

4. Overhead of orchestration and management:

Legacy firewalls require additional VMs for orchestration, policy, operations, and license management, which adds another layer of complexity and cost. Replicating this stack for each cloud provider multiplies the operational burden.

5. Blind spots due to multi-hops to a destination:

Legacy, IP-based approaches need multiple tools such as firewalls, SD-WAN routers, NACLs, and security groups. Each of these requires its own logging, and poor log correlation creates additional blind spots for networking and security teams.

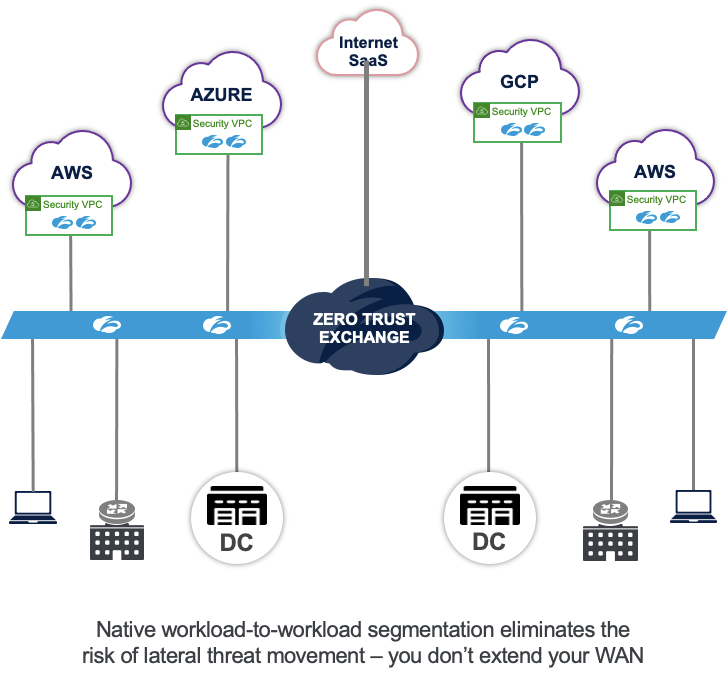

Extending zero trust to cloud workloads

Fortunately, we have already seen the corporate WAN challenge play out for employee access over the past several years. To overcome the security and performance shortcomings of the IP and firewall approach, an increasing percentage of enterprises have adopted a zero trust strategy. Those same zero trust principles, reinvented for the specific needs of cloud workloads, are the way forward for multi-cloud networking.

With zero trust, networks are never trusted, so IP and routing information is never exchanged. Connectivity is enabled at a granular level for workloads based on identity and context, so there is no need to create static firewall rules to restrict the IP access between the environments.
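The difference between a static IP rule and an identity-based policy can be sketched in a few lines. This is an illustrative model, not Zscaler's policy engine: the workload names, IP addresses, and `key=value` labels are hypothetical, and the labels are assumed to be verified by the platform rather than self-asserted by the workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    ip: str
    # Hypothetical identity/context attributes, assumed to be verified by the
    # platform (not self-asserted by the workload).
    labels: frozenset


# Legacy model: a static rule keyed on source IP, destination IP, and port.
STATIC_RULES = {("10.0.1.15", "10.2.0.20", 5432)}

# Zero trust model: a policy keyed on identity and context instead of addresses.
# Each entry: (required source labels, required destination labels, port).
IDENTITY_POLICY = [
    (frozenset({"app=billing", "env=prod"}), frozenset({"app=ledger-db"}), 5432),
]


def ip_rule_allows(src: Workload, dst: Workload, port: int) -> bool:
    return (src.ip, dst.ip, port) in STATIC_RULES


def identity_allows(src: Workload, dst: Workload, port: int) -> bool:
    return any(
        src_req <= src.labels and dst_req <= dst.labels and port == p
        for src_req, dst_req, p in IDENTITY_POLICY
    )


if __name__ == "__main__":
    db = Workload("ledger-db", "10.2.0.20", frozenset({"app=ledger-db"}))
    # The billing workload was rescheduled and received a new IP address.
    billing = Workload("billing", "10.0.3.7", frozenset({"app=billing", "env=prod"}))
    # An unknown workload happens to land on the IP the static rule trusts.
    rogue = Workload("rogue", "10.0.1.15", frozenset({"app=unknown"}))
    print("billing:", ip_rule_allows(billing, db, 5432), identity_allows(billing, db, 5432))
    print("rogue:", ip_rule_allows(rogue, db, 5432), identity_allows(rogue, db, 5432))
```

The static rule fails in both directions: it blocks the legitimate workload after its IP changes and admits whatever lands on the trusted IP. The identity-based check is unaffected by either event.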

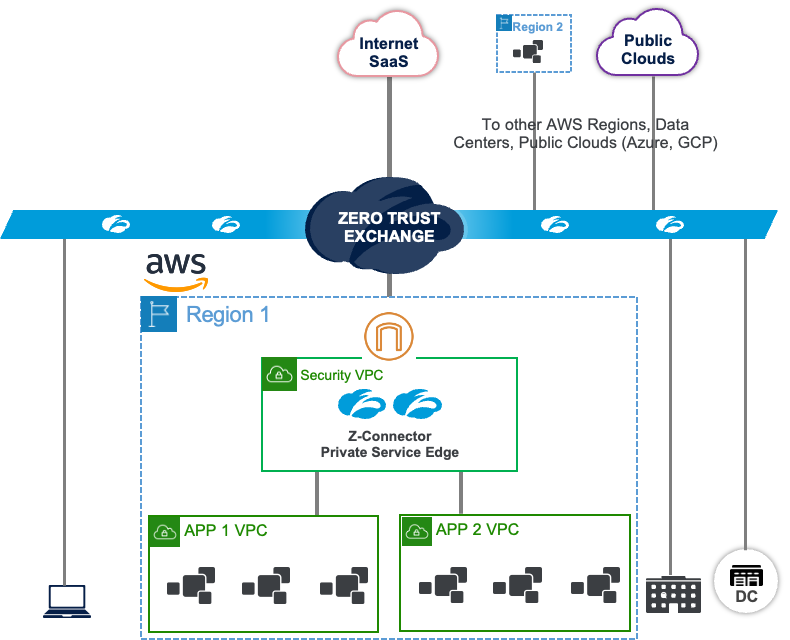

Zscaler Internet Access (ZIA) and Zscaler Private Access (ZPA) are the market leaders in securing end-user access to the internet and to private applications. Zscaler’s Workload Communications, enabled by Cloud Connector, extends these solutions to secure workload access to the internet (ZIA for workloads) and private access to remote private workloads (ZPA for workloads). This groundbreaking new approach simultaneously improves security, reduces operational complexity, and improves application performance.

Use case 1: Enabling zero trust for workload-to-internet communication

Cloud Connector enables workloads to connect directly to the Zscaler cloud for internet access. All security services, such as SSL proxy, data protection, and advanced threat protection, run natively in the Zero Trust Exchange. With this architecture, you can apply the same security policy to all workloads accessing the internet from any cloud. This is in stark contrast to legacy architectures, where you need to build a castle-and-moat architecture with firewalls and Squid proxies in every cloud.

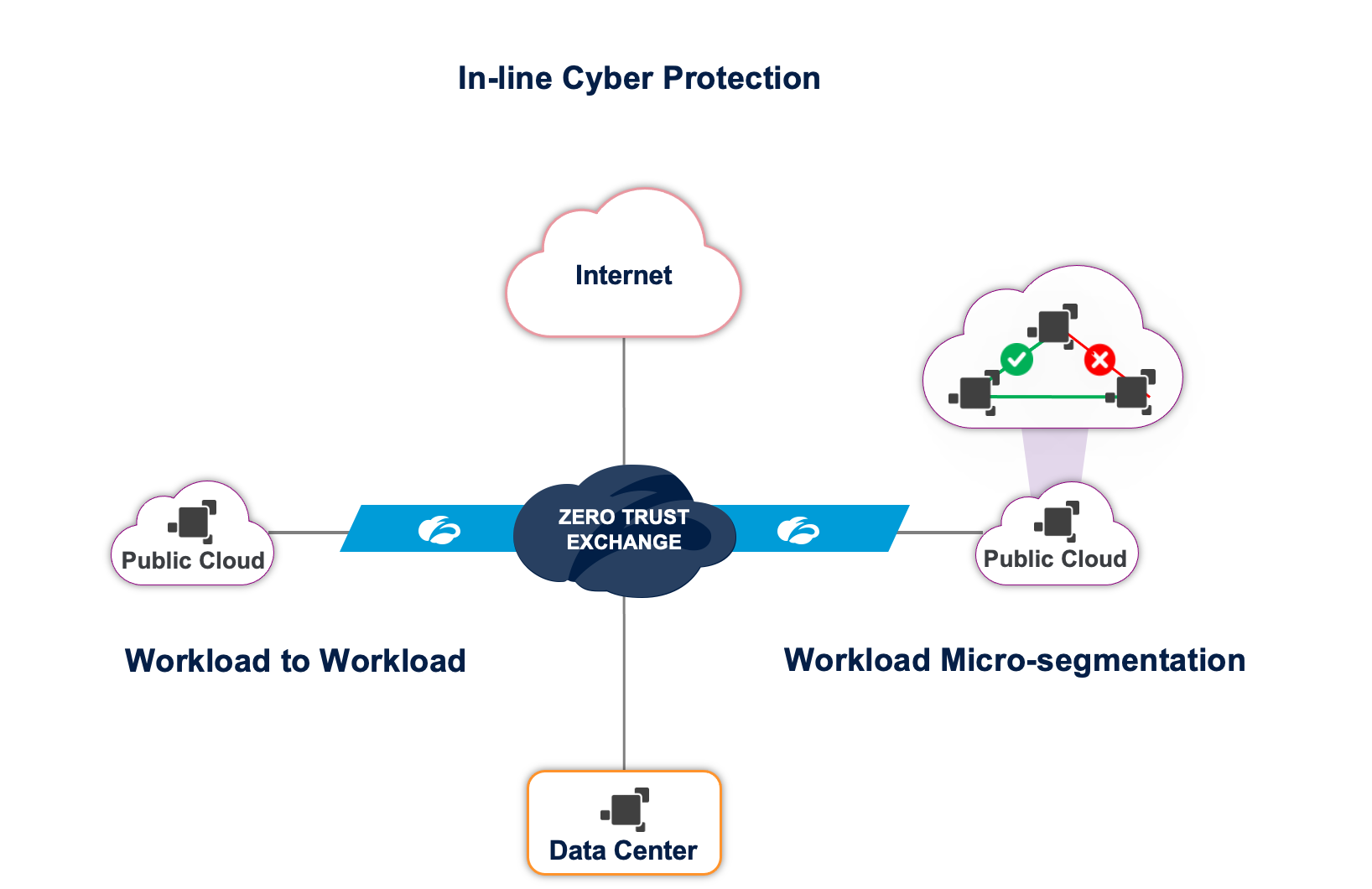

Use case 2: Enabling zero trust for workload-to-workload communication in a multi-cloud environment

Cloud Connector enables workloads to connect directly to the Zscaler cloud. Workloads in any environment (AWS, Azure, data center) can communicate with each other over the Zero Trust Exchange, which uses identity and context to verify trust before establishing the connection. This is in stark contrast with legacy architectures, where IP routing is required between these cloud environments.

Use case 3: Enabling zero trust for workload-to-workload communication within a VPC/VNET

Zscaler Workload Segmentation takes segmentation to a granular level: inside a virtual machine, at the level of an individual application or process. A workload segmentation agent establishes software identity by generating process-level fingerprints. Automated machine learning discovers all the available paths to a particular process or application and recommends which paths to allow, pruning the unnecessary paths that increase the risk of lateral threat movement. This contrasts with legacy architectures that rely on static access control lists (ACLs) and security groups (SGs), which an attacker can easily evade by piggybacking on an existing rule.
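The idea of process-level identity plus pruned paths can be sketched with simple stand-ins. The fingerprint and recommendation logic here are assumptions for illustration only: a SHA-256 hash of the executable path and bytes replaces the agent's actual fingerprinting, and a frequency threshold over observed flows replaces the machine-learning recommendation. The application names and ports are hypothetical.

```python
import hashlib
from collections import Counter


def process_fingerprint(binary: bytes, path: str) -> str:
    """Hypothetical process identity: SHA-256 over the executable path and bytes."""
    h = hashlib.sha256()
    h.update(path.encode())
    h.update(b"\0")
    h.update(binary)
    return h.hexdigest()


def recommend_allowlist(observed_flows, min_count=3):
    """Keep only paths seen at least min_count times during a learning window.

    observed_flows: iterable of (src_fingerprint, dst_app, port) tuples.
    A frequency threshold stands in for the ML-based recommendation.
    """
    counts = Counter(observed_flows)
    return {flow for flow, n in counts.items() if n >= min_count}


def is_allowed(allowlist, src_fp, dst_app, port) -> bool:
    # Default deny: any path not in the recommended allowlist is pruned.
    return (src_fp, dst_app, port) in allowlist


if __name__ == "__main__":
    web = process_fingerprint(b"<nginx executable bytes>", "/usr/sbin/nginx")
    flows = [(web, "orders-api", 8443)] * 5 + [(web, "ssh", 22)]
    allowlist = recommend_allowlist(flows)
    print(is_allowed(allowlist, web, "orders-api", 8443))  # steady-state path kept
    print(is_allowed(allowlist, web, "ssh", 22))           # rare path pruned
```

Because the rule is keyed on the fingerprint rather than on an IP and port, a tampered binary gets a different fingerprint and cannot piggyback on the existing `orders-api` rule.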

How zero trust solves IP & firewall limitations

Across the legacy architectures explored above, the common underlying problem is IP connectivity. Traditionally, networking and security are viewed as separate functions: connectivity (IP routing) is enabled first, and security (firewall filtering) is layered on top. Some vendors have integrated both into a single appliance, but the approach and architecture are the same.

Zscaler’s Zero Trust Exchange takes a uniquely different approach: security first and connectivity later. Why create unnecessary connectivity that you'll need to block later?

In Zscaler's zero trust approach, we trust no one. By default, the source can connect only to the Zero Trust Exchange, not to the destination application. All workload traffic is forwarded to Cloud Connector, which then connects these workloads to the Zero Trust Exchange.

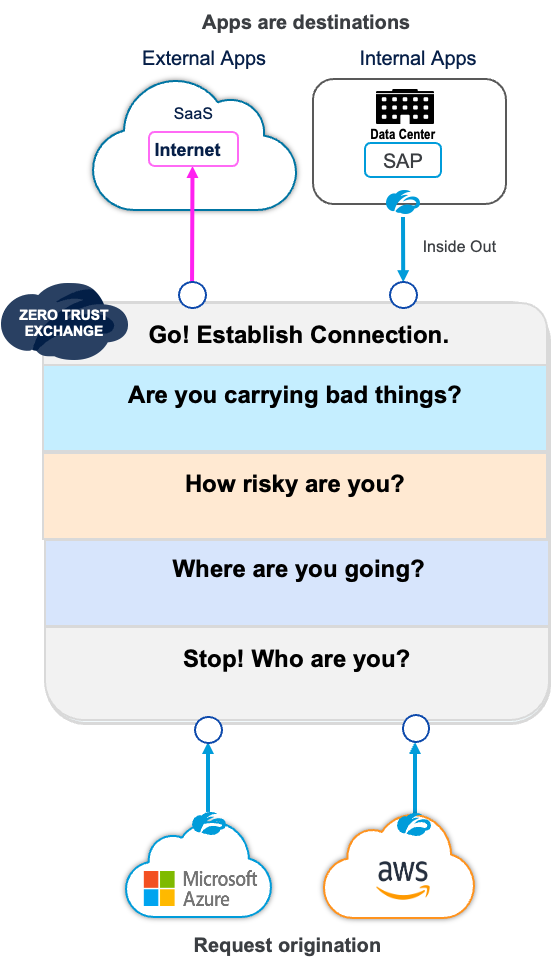

The Zero Trust Exchange goes through the following steps before the source and destination are connected:

Step 1: Who are you?

The Zero Trust Exchange verifies where the workload traffic is coming from, such as an authenticated and registered Cloud Connector or a customer VPC or VNET.

Step 2: Where are you going?

You can define policy based on the destination: the Zero Trust Exchange can check whether the destination is a sanctioned or unsanctioned application.

Step 3: How risky are you?

You can define policy based on the source VPC/VNET. For example, enterprise VPCs/VNETs can be trusted with access to certain destinations, while partner VPCs/VNETs, which are considered risky, are denied access to those destinations.

Step 4: Are you carrying bad things?

For untrusted internet destinations, you can enable full SSL proxy and inspect everything flowing from the source to the destination and vice versa.

Step 5: Establish the connection

For internet destinations, the Zero Trust Exchange proxies the traffic and establishes the connection to the destination. For private application access, the Application Connector at the destination makes an inside-out connection, and the Zero Trust Exchange acts as the rendezvous point that joins the two connections.
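The five steps above can be read as an ordered series of checks, where the first failing check stops the connection. The sketch below models only that ordering. Every name in it (connector IDs, VPC IDs, destinations) is hypothetical, and a byte-signature match stands in for full SSL inspection and threat engines; it is not how the Zero Trust Exchange is implemented.

```python
from dataclasses import dataclass

# All names below are hypothetical; they illustrate the order of checks only.
REGISTERED_CONNECTORS = {"cc-aws-use1", "cc-azure-weu"}
UNSANCTIONED_DESTINATIONS = {"filesharing.example"}
TRUSTED_INTERNET = {"api.github.com"}
PRIVATE_APPS = {"billing.internal"}
SENSITIVE_DESTINATIONS = {"billing.internal"}
PARTNER_VPCS = {"vpc-partner-01"}
BLOCKED_SIGNATURES = (b"EICAR",)  # stand-in for SSL inspection / threat engines


@dataclass
class Request:
    connector_id: str
    source_vpc: str
    destination: str
    payload: bytes = b""


def evaluate(req: Request) -> str:
    # Step 1: Who are you? Only authenticated, registered Cloud Connectors.
    if req.connector_id not in REGISTERED_CONNECTORS:
        return "deny: unknown source"
    # Step 2: Where are you going? Unsanctioned destinations are blocked.
    if req.destination in UNSANCTIONED_DESTINATIONS:
        return "deny: unsanctioned destination"
    # Step 3: How risky are you? Partner VPCs cannot reach sensitive destinations.
    if req.source_vpc in PARTNER_VPCS and req.destination in SENSITIVE_DESTINATIONS:
        return "deny: risky source"
    # Step 4: Are you carrying bad things? Inspect traffic to untrusted internet sites.
    is_private = req.destination in PRIVATE_APPS
    if not is_private and req.destination not in TRUSTED_INTERNET:
        if any(sig in req.payload for sig in BLOCKED_SIGNATURES):
            return "deny: threat detected"
    # Step 5: Establish the connection.
    if is_private:
        # The destination side dialed out earlier; the exchange joins both legs.
        return "connect: rendezvous with Application Connector"
    return "connect: proxy to internet destination"


if __name__ == "__main__":
    print(evaluate(Request("cc-aws-use1", "vpc-partner-01", "billing.internal")))
    print(evaluate(Request("cc-azure-weu", "vpc-prod", "billing.internal")))
```

Ordering the cheap identity and policy checks before inspection means that untrusted sources are rejected before any traffic is proxied.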

Key benefits of applying zero trust to cloud workloads

1. Cyber threat protection for all workloads:

Prevent compromise of workloads:

All workload communication with the internet is inspected for cyber threats, protecting workloads against botnets, malicious active content, and fraud.

Prevent lateral movement of threats across clouds:

With zero trust connectivity, each environment (data center, cloud region, etc.) is isolated, and every destination in other environments is non-routable. This prevents lateral movement of threats across environments.

Prevent lateral movement of threats inside a data center or within a cloud region:

Identity-based microsegmentation at the process level prevents lateral movement of threats within a data center or cloud region.

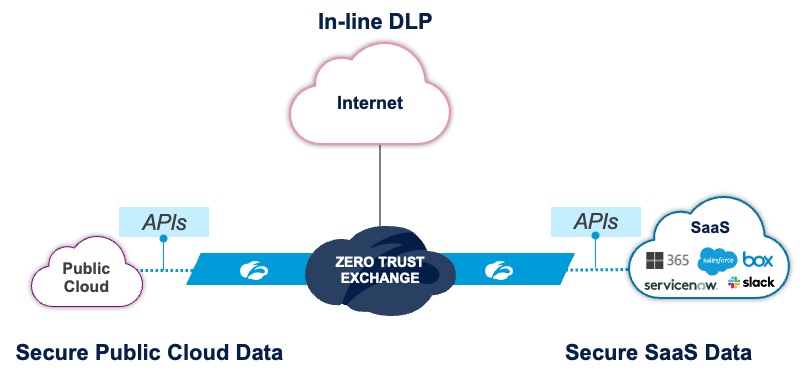

2. Securing data in SaaS and public clouds:

Securing public cloud data:

Ensure least-privilege access to workloads by minimizing entitlements with cloud infrastructure entitlement management (CIEM), and reduce the risk of compromise by remediating misconfigurations with cloud security posture management (CSPM).

Securing SaaS data:

Gain visibility into shadow IT to prevent the use of unsanctioned applications, and prevent oversharing of SaaS data with API-based CASB.

Inline DLP:

Prevent data breaches and exfiltration by ensuring that all workload traffic going to the internet is inspected by DLP.

3. Hyperscale performance and capacity:

Zscaler has more than 150 Zero Trust Exchange service POPs. These POPs peer with all major cloud providers and each of them has an operating capacity of terabytes. By default, Cloud Connector connects to the POP that has the best availability and closest proximity.

4. DevOps-ready & fully automated:

Management and policy are centralized and cloud-delivered, and Zscaler manages all upgrades for the Cloud Connectors. Terraform-based orchestration integrates readily with DevOps CI/CD pipelines, and platform-native automation such as AWS CloudFormation and Azure Resource Manager (ARM) templates is provided out of the box.

5. Full logging & log streaming:

All outgoing traffic from any Cloud Connector, including traffic going to the Zero Trust Exchange, is logged and can be streamed to a SIEM or log aggregator of your choice. More sophisticated filtering is available for streaming to critical locations.
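The default POP choice mentioned under hyperscale performance, connecting to the POP with the best availability and closest proximity, can be approximated as "filter out unavailable POPs, then pick the lowest round-trip time." This is an assumption about the shape of the logic, not Zscaler's actual selection algorithm: availability is modeled as a simple health flag, proximity as measured RTT, and the POP names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Pop:
    name: str       # hypothetical POP identifier
    healthy: bool   # result of an availability/health probe
    rtt_ms: float   # measured round-trip time from the Cloud Connector


def choose_pop(pops: Sequence[Pop]) -> Optional[Pop]:
    """Pick the closest available POP; return None if none are available."""
    candidates = [p for p in pops if p.healthy]
    if not candidates:
        return None
    # Lowest RTT wins; the name breaks ties so the choice is deterministic.
    return min(candidates, key=lambda p: (p.rtt_ms, p.name))


if __name__ == "__main__":
    pops = [Pop("pop-a", True, 18.0), Pop("pop-b", False, 4.0), Pop("pop-c", True, 9.5)]
    best = choose_pop(pops)
    print(best.name if best else "no POP available")
```

Filtering on availability first means an unhealthy nearby POP is skipped in favor of a slightly farther healthy one, which is the trade-off the phrase "best availability and closest proximity" implies.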

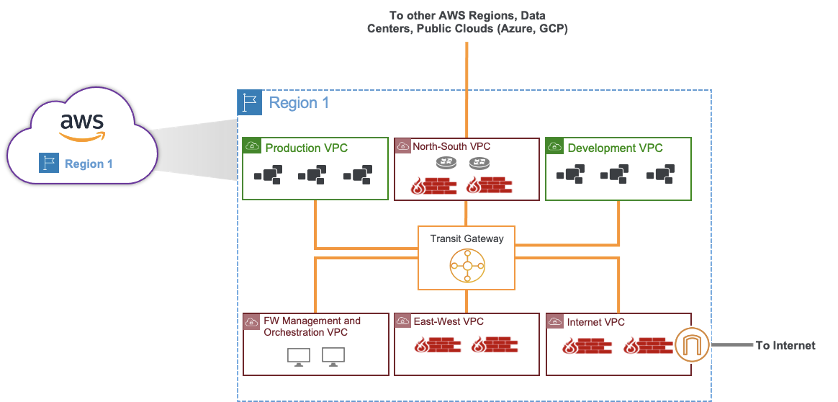

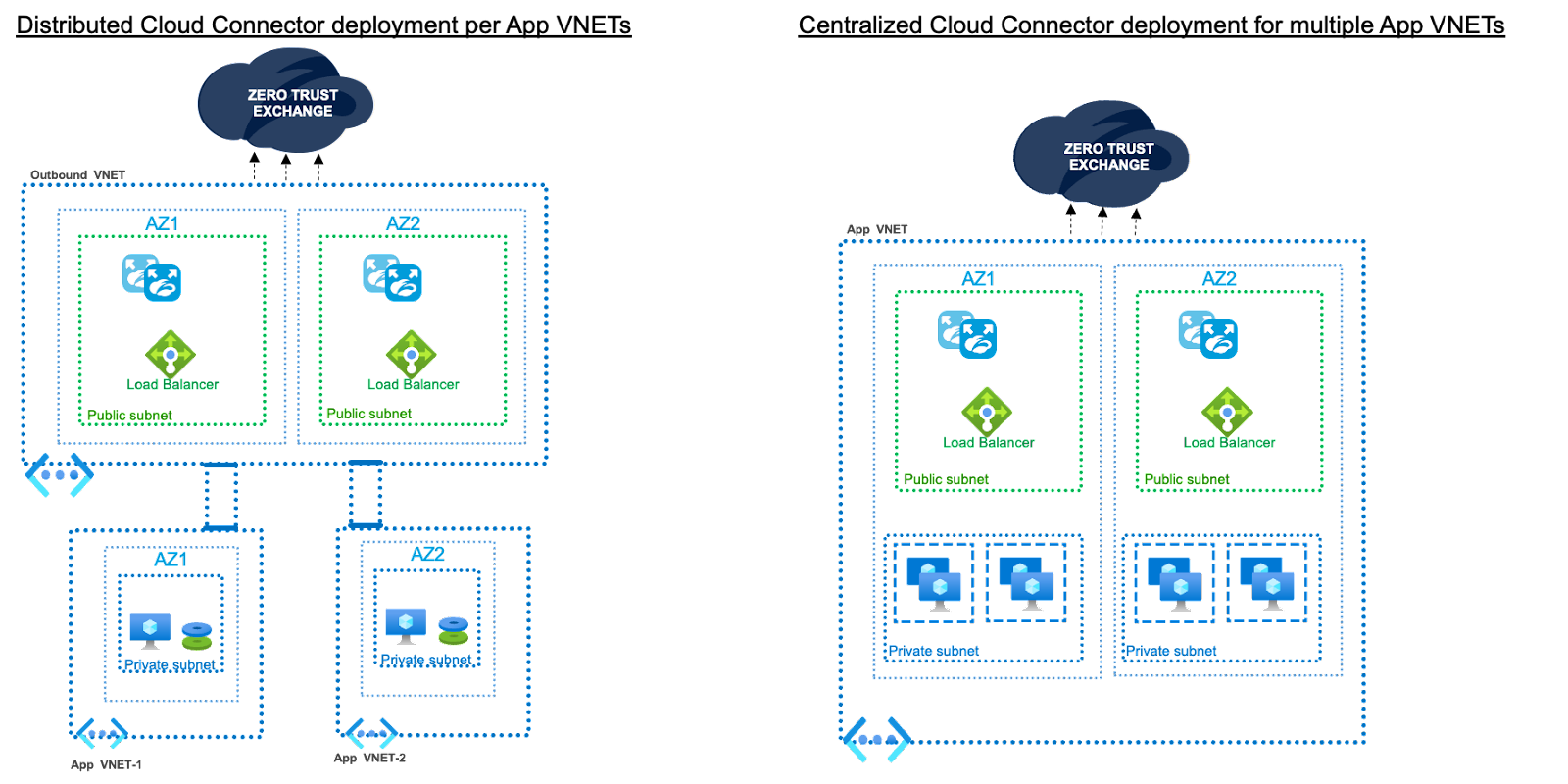

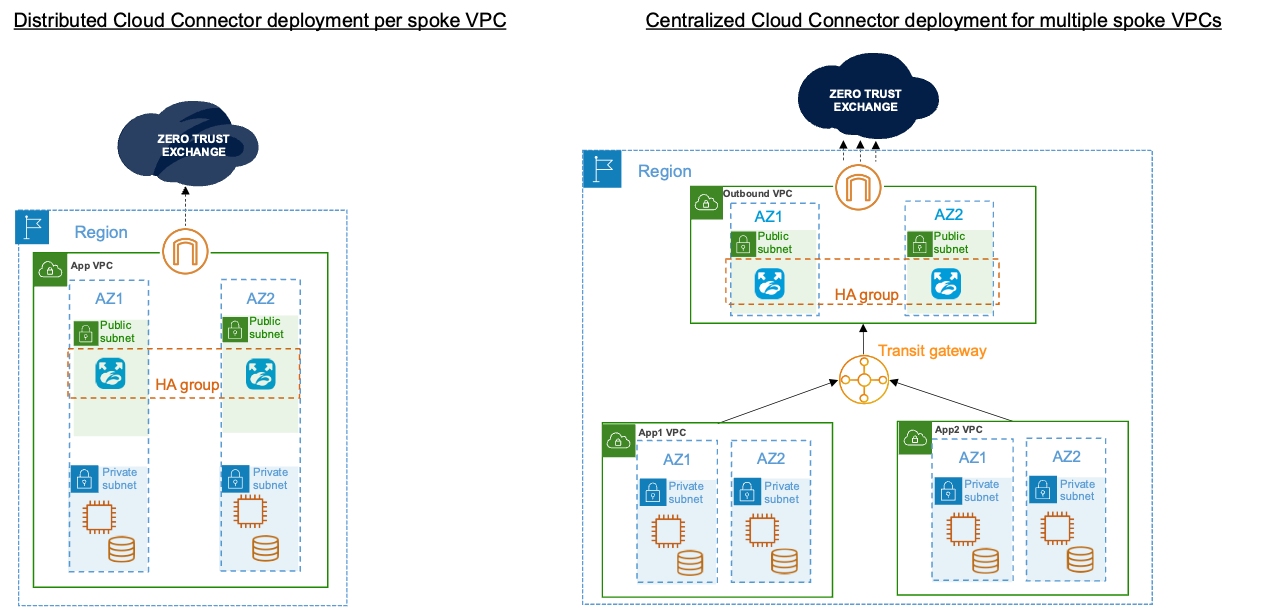

Deploying Cloud Connectors

Cloud Connector can be deployed in each VPC/VNET when isolation is required per VPC/VNET, or in a central location when isolation is required only for a group of VPCs/VNETs. This flexibility supports both centralized and distributed deployment models.

In summary, workloads can be treated as mirror images of users. By moving to a zero trust architecture for workload communications, you can protect your data and workloads, eliminate attack surfaces, and stop the lateral movement of threats. We welcome you to join us on this zero trust journey to transform your cloud connectivity. To schedule a demo, reach out to us at ask-cloudprotection@zscaler.com.