Zscaler Blog

Generative AI: 5 Enterprise Predictions for AI and Security — for 2023, 2024, and Beyond

Trends/Predictions

- Enterprise use of AI tools will only grow, with industries like manufacturing leading the charge

- Enterprises will secure AI/ML applications to stay ahead of risk

- Enterprises will seek visibility and intelligent access controls around AI and ML applications

- AI will become a key component of enterprise data protection

- AI will transform how enterprises understand risk and security from the top down

We’ve entered the era of widespread adoption for generative AI tools. Across IT, finance, marketing, engineering, and more, AI advances are prompting enterprises to re-evaluate their traditional approaches in order to unlock AI’s transformative potential. Our recent analysis of AI/ML trends and usage confirms this: enterprises across industries have substantially increased their use of generative AI across many kinds of tools.

What can enterprises learn from these trends, and what future enterprise developments can we expect around generative AI? Between our research and dozens of conversations with customers and partners, there are a number of trends that we can expect to see this year, in 2024, and onward.

Enterprise use of AI tools will only grow, with industries like manufacturing leading the charge

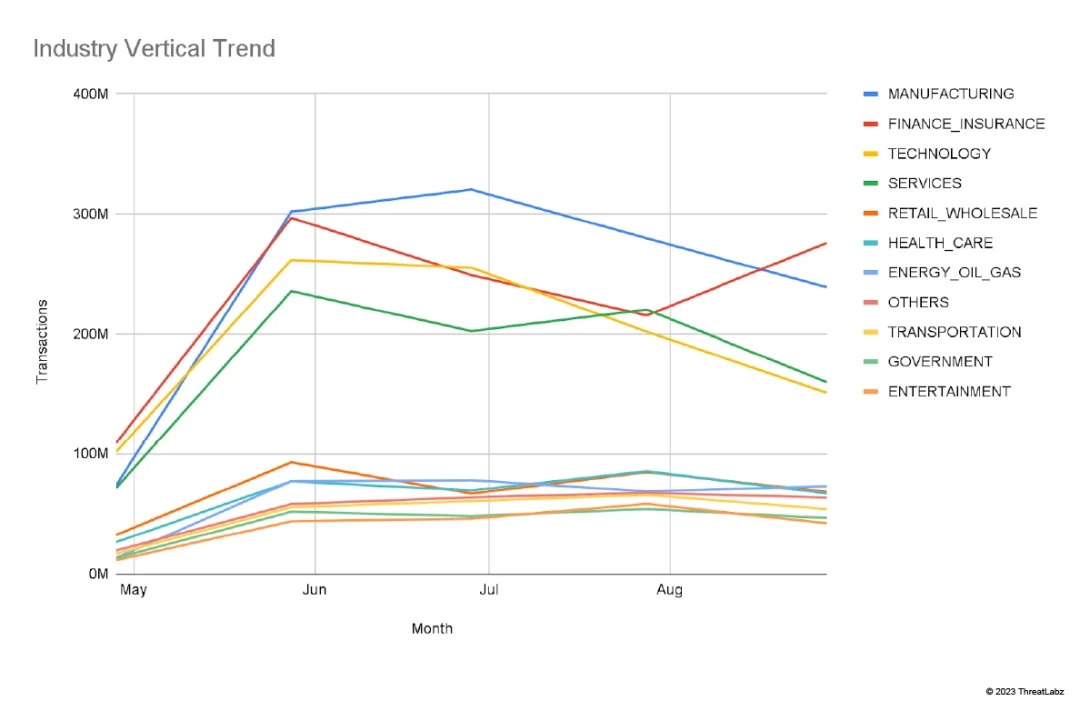

Our research shows that, mirroring the broader AI trend, enterprises across industry verticals sharply increased their use of AI from May 2023 to June 2023, with sustained growth through August 2023. The majority of AI/ML traffic is being driven by manufacturing, which may offer a glimpse into the rapid innovation and transformation driven by Industry 4.0. Manufacturing’s substantial engagement with these tools highlights the key role that AI and ML will likely play in the industry’s future.

Figure 1: Breakdown of AI popularity by industry vertical

Other industries, like finance, have shown steep growth in the use of AI/ML tools, largely driven by the adoption of generative AI chat tools like ChatGPT and Drift. Indeed, since June 2023, the finance sector has experienced continuous growth in these areas.

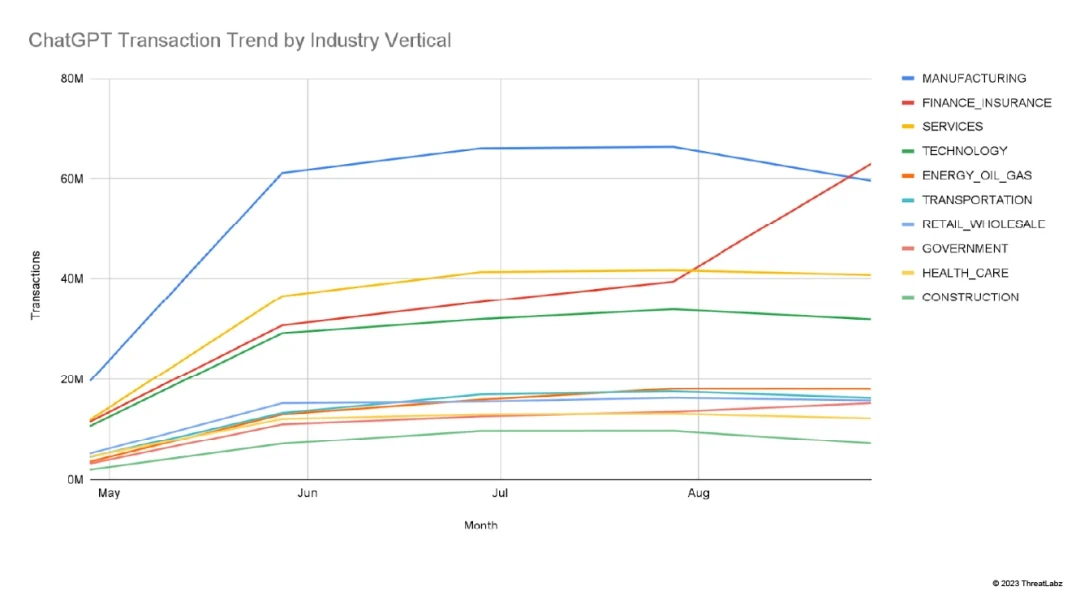

Figure 2: ChatGPT transaction trends by industry vertical

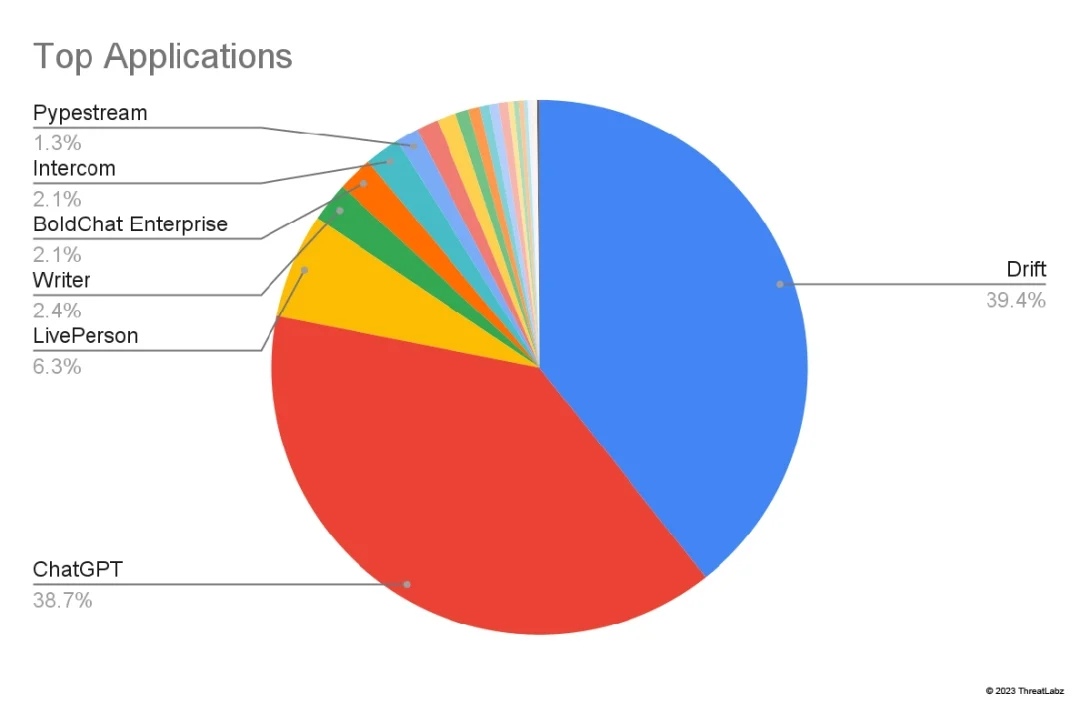

Unsurprisingly, OpenAI.com has emerged as a driving force, accounting for 36% of the AI/ML traffic we observed, and 58% of the traffic to that domain can be attributed to ChatGPT. However, when it comes to the most popular tool in use, Drift takes the crown, followed by ChatGPT and tools like LivePerson and Writer. As new AI use cases continue to emerge, it is likely that enterprises will adopt AI not merely by leveraging generative AI chat tools, but as a core driver of business that can create competitive differentiation.
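To put the two OpenAI.com figures on the same footing, here is a small worked calculation. It only multiplies the two percentages quoted above; the variable names are ours, and the result is a derived estimate rather than a separately measured number.

```python
# Shares quoted in this post
openai_share_of_ai_traffic = 0.36  # OpenAI.com as a fraction of all observed AI/ML traffic
chatgpt_share_of_openai = 0.58     # ChatGPT as a fraction of OpenAI.com traffic

# ChatGPT as a fraction of all observed AI/ML traffic
chatgpt_share_of_ai_traffic = openai_share_of_ai_traffic * chatgpt_share_of_openai

print(f"ChatGPT share of all AI/ML traffic: {chatgpt_share_of_ai_traffic:.1%}")
# prints "ChatGPT share of all AI/ML traffic: 20.9%"
```

In other words, ChatGPT alone works out to roughly a fifth of the AI/ML traffic observed.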

Figure 3: Pie chart of top AI applications

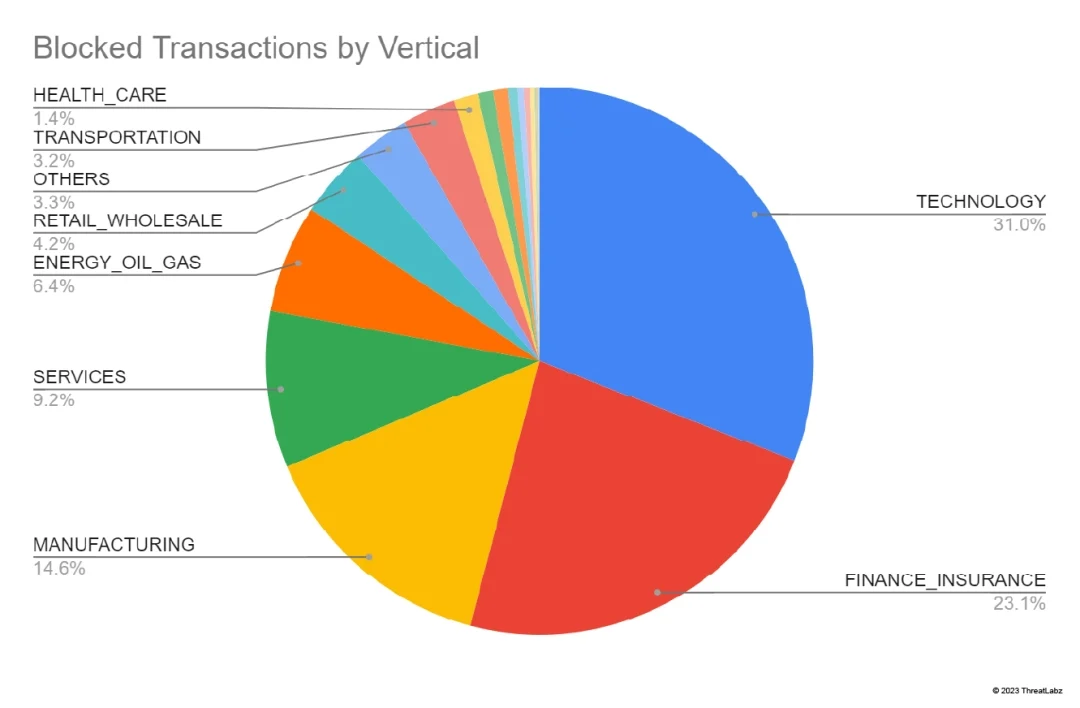

Enterprises will work to secure AI/ML applications to stay ahead of risk

Our research also found that as enterprises adopt AI/ML tools, subsequent transactions undergo significant scrutiny. Overall, 10% of AI/ML-related transactions are blocked across the Zscaler cloud using URL filtering policies. Here, the technology and finance industries are leading the charge, accounting for more than half of blocked transactions. Interestingly, Drift holds the distinction of being the most blocked, as well as the most used, AI application. In all likelihood, other industries will follow their lead to minimize the risks associated with AI and ML tools.

Figure 4: Blocked transactions by industry vertical

The risks of leveraging AI and ML tools

As we discussed in a recent blog, the risks of using generative AI tools in the enterprise are significant. In general, they fall into two buckets:

1. The release of intellectual property and non-public information

Generative AI tools can make it easy for well-meaning users to leak sensitive and confidential data. Once shared, this data can be fed into the data lakes used to train large language models (LLMs), and can be discovered by other users. For example, a backend developer might ask ChatGPT, “Can you take this [my source code for a new product] and optimize it?” Or a sales team member might enter the prompt, “Can you create sales trends based on the following Q2 pipeline data?” In both cases, sensitive information or protected IP may have leaked outside the organization.

2. The data privacy and security risks of AI applications themselves

Not all AI applications are created equal. Terms and conditions can vary widely among the hundreds of AI/ML applications in popular use. Will your queries be used to further train an LLM? Will your data be mined for advertising purposes? Will it be sold to third parties? These are questions enterprises must answer. Similarly, the security posture of these applications can also vary, both in terms of how data is secured and the overall security posture of the company. Enterprises must be able to account for each of these factors, assigning risk scores among hundreds of AI/ML applications, to secure their use.
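As a rough illustration of what assigning risk scores could look like, here is a minimal sketch that turns the questions above into a 0 to 100 score. Everything in it is an assumption made for illustration: the `AIAppProfile` fields, the weights, and the example application are hypothetical, not a description of how any particular product scores applications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIAppProfile:
    """Hypothetical answers to the questions an enterprise might ask about an AI app."""
    name: str
    trains_on_user_data: bool          # Will queries be used to further train an LLM?
    uses_data_for_ads: bool            # Will data be mined for advertising purposes?
    sells_data_to_third_parties: bool  # Will data be sold to third parties?
    encrypts_data_at_rest: bool        # How is the data secured?
    vendor_has_security_attestation: bool  # Overall security posture of the company


def risk_score(app: AIAppProfile) -> int:
    """Return a score from 0 (lowest risk) to 100 (highest), using illustrative weights."""
    score = 0
    if app.trains_on_user_data:
        score += 30
    if app.uses_data_for_ads:
        score += 20
    if app.sells_data_to_third_parties:
        score += 30
    if not app.encrypts_data_at_rest:
        score += 10
    if not app.vendor_has_security_attestation:
        score += 10
    return score


# Hypothetical application, not a real product
example = AIAppProfile(
    name="example-chat-tool",
    trains_on_user_data=True,
    uses_data_for_ads=False,
    sells_data_to_third_parties=False,
    encrypts_data_at_rest=True,
    vendor_has_security_attestation=False,
)
print(example.name, risk_score(example))  # example-chat-tool 40
```

In practice the inputs would come from reviewing each application's terms and conditions and security documentation, and the weights would reflect the organization's own risk appetite.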

Enterprises will seek visibility and intelligent access controls around AI and ML applications

As a corollary to this last trend, it’s likely that enterprises will continue to seek precise controls for their AI/ML applications. For many enterprises, visibility will be the starting point of creating an AI security policy, followed by the adoption of intelligent, granular access controls to ensure that users can embrace these tools in an approved, secure fashion. There are a number of key questions that enterprises will want to answer, including:

- Do I have deep visibility into employee AI app usage? Enterprises will seek complete visibility into the AI/ML tools in use. In addition, they will monitor corporate traffic and transactions to these applications.

- Can I allow access to only certain AI apps? Enterprises will want to allow granular access to approved AI applications at the department, team, and user levels. Moreover, they will want to use URL filtering to broadly block access to unsafe or unwanted AI/ML tools. In addition, enterprises may consider the ability to allow ‘cautioned’ access—where users may use a specific tool, but they are coached around the risks and limitations of using it.

- Which AI applications protect private data? Enterprises must understand the security posture of the AI apps that employees are using, among the hundreds of AI tools in everyday use. In order to appropriately configure access policies, enterprises must understand which of these applications will protect their data and assess the security of the organizations creating and managing these applications.

- Can I prevent data from leaving the organization? Preventing data loss will be a key factor in embracing generative AI. Enterprises will likely gravitate to data loss prevention (DLP) technologies that allow them to create policies preventing the leakage of sensitive data like source code, structured data like credit card information, and PII. Taking this a step further, it will also become more common for enterprises to block the risky user actions that are key contributors to data loss when using generative AI tools, such as copy and paste, uploads, and downloads.
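The allow, caution, and block options described in the questions above can be sketched as a simple policy lookup. This is a minimal illustration under stated assumptions: the domains, departments, and policy table are hypothetical, and the default-block behavior for unlisted AI apps is our choice for the example, not a description of any vendor's URL filtering engine.

```python
from enum import Enum
from urllib.parse import urlparse


class Action(Enum):
    ALLOW = "allow"
    CAUTION = "caution"  # user may proceed after being coached on risks and limitations
    BLOCK = "block"


# Hypothetical catalog of domains categorized as AI/ML applications
AI_DOMAINS = {"approved-ai.example", "unvetted-ai.example"}

# Hypothetical per-department policy: (department, domain) -> action
POLICY = {
    ("engineering", "approved-ai.example"): Action.ALLOW,
    ("marketing", "approved-ai.example"): Action.CAUTION,
}


def decide(department: str, url: str) -> Action:
    """Return the action for a request, blocking AI apps that have no explicit rule."""
    host = (urlparse(url).hostname or "").lower()
    if host not in AI_DOMAINS:
        # Not categorized as an AI/ML app, so this policy does not apply
        return Action.ALLOW
    return POLICY.get((department.lower(), host), Action.BLOCK)


print(decide("Engineering", "https://approved-ai.example/chat"))  # Action.ALLOW
print(decide("Marketing", "https://approved-ai.example/chat"))    # Action.CAUTION
print(decide("Finance", "https://unvetted-ai.example/"))          # Action.BLOCK
```

Defaulting to block for unlisted AI applications reflects the broad blocking of unsafe or unwanted tools mentioned above; a real policy would also key on teams and individual users.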

AI and ML will become a key component of enterprise data protection

As enterprises recognize the need to prevent data loss across their footprint, it is likely that they will increasingly leverage AI as a means of identifying and protecting their data. In conversations with customers about preventing data leakage, one challenge that frequently surfaces is that enterprises often lack visibility into all the places where their critical data lives, and are thus unable to classify and protect it using technologies like DLP. DLP remains an attractive option in the context of generative AI — we have a video here showing how DLP blocks source code from being entered into ChatGPT, for instance. However, lacking a complete understanding of their data, enterprises can find it challenging to create policies that prevent leakage when using these tools.
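To make the idea of a DLP policy for AI prompts concrete, here is a deliberately simplified sketch that inspects a prompt before it is sent. It is an assumption-laden illustration, not how any DLP product works: `dlp_findings` and `should_block` are hypothetical names, the card check uses the standard Luhn checksum, and the source code check is a naive keyword heuristic that would produce false positives in real use.

```python
import re
from typing import List

# 13 to 19 digits, optionally separated by single spaces or hyphens
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")

# Naive hints that a line of the prompt is source code (illustrative only)
CODE_HINTS = re.compile(
    r"^\s*(?:def\s+\w+\s*\(|class\s+\w+|#include\s*<|import\s+\w+)",
    re.MULTILINE,
)


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def dlp_findings(prompt: str) -> List[str]:
    """Return the categories of sensitive data detected in a prompt."""
    findings = []
    for match in CARD_CANDIDATE.finditer(prompt):
        if luhn_valid(re.sub(r"\D", "", match.group())):
            findings.append("credit_card_number")
            break
    if CODE_HINTS.search(prompt):
        findings.append("source_code")
    return findings


def should_block(prompt: str) -> bool:
    """Block the prompt if any sensitive category was detected."""
    return bool(dlp_findings(prompt))


print(dlp_findings("Can you optimize this?\ndef price(x):\n    return x * 2"))
# ['source_code']
print(dlp_findings("Card 4111 1111 1111 1111 on file"))
# ['credit_card_number']
```

The sketch also shows the limitation discussed here: pattern rules only catch data whose shape is known in advance, which is why a complete picture of where sensitive data lives matters.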

As a result, we anticipate that enterprises will increasingly leverage AI as a way to gain data visibility and improve their data hygiene. Using ML, for instance, it is now possible to discover and classify sensitive data automatically, such as financial and legal documents, PII and medical data, and much more. From there, enterprises will take the next step and use these ML-driven data categories as the basis of DLP policy — thus preventing data loss when using tools like ChatGPT.

AI will transform how enterprises understand risk and security from the top down

We’ve talked about how enterprises are adopting generative AI tools to transform business. Broadening out, it’s also possible to anticipate the ways AI will become a core business function, specifically from the perspective of risk and security. While enterprises currently leverage AI to unleash new potential and insights across IT, technology, marketing, customer experience, and more, they will increasingly look to AI and ML to transform how they view risk. Here are three key roles that AI will increasingly play for security:

- Provide a comprehensive view of risk. In conversations with customers, we hear that enterprises commonly have limited visibility and fragmented or delayed data around enterprise risk. In much the same way that AI can help discover and classify data, we predict enterprises will use AI to visualize and quantify risk across their entire footprint. This includes gaining comprehensive insights and risk scoring across their attack surface and across their business entities, including their workforce, applications, assets, and third parties.

- Deliver top-down visualization and reporting. Similarly, enterprises will leverage AI to gain top-down and board-level visualizations of their risk, a critical but rarely achieved goal. Enterprises will use these insights to uncover and drill down into the top factors contributing to their risk, including the ability to quantify the financial impact of exposures.

- Drive prioritized remediation. Finally, enterprises will seek AI tools that allow them to automatically gain prioritized security actions and policy recommendations, which are tied to their key risk drivers and which quantifiably improve the security of their organization.

Want to learn more about how enterprises can better embrace AI and solve its risks? Tune in to our webinar, AI vs. AI: Harnessing AI Defenses Against AI-Powered Risks.

Disclaimer: This blog post was created by Zscaler for informational purposes only and is provided "as is," without any guarantee of the accuracy, completeness, or reliability of its content. Zscaler assumes no responsibility for any errors or omissions, or for any actions taken based on the information provided. Any links to third-party websites or resources are offered for convenience only, and Zscaler is not responsible for their content or practices. All content is subject to change without notice. By accessing this blog, you agree to these terms and acknowledge that you are solely responsible for verifying and using the information as appropriate for your needs.
