Background and Recap of Part 1

In Part 1 of this blog series, Internet Egress Security Architecture for AWS Workloads - Regional Hubs, I discussed the complexities organizations encounter when securing AWS workloads, how Zscaler can help, and the details surrounding a hub-and-spoke architecture with AWS Transit Gateways.

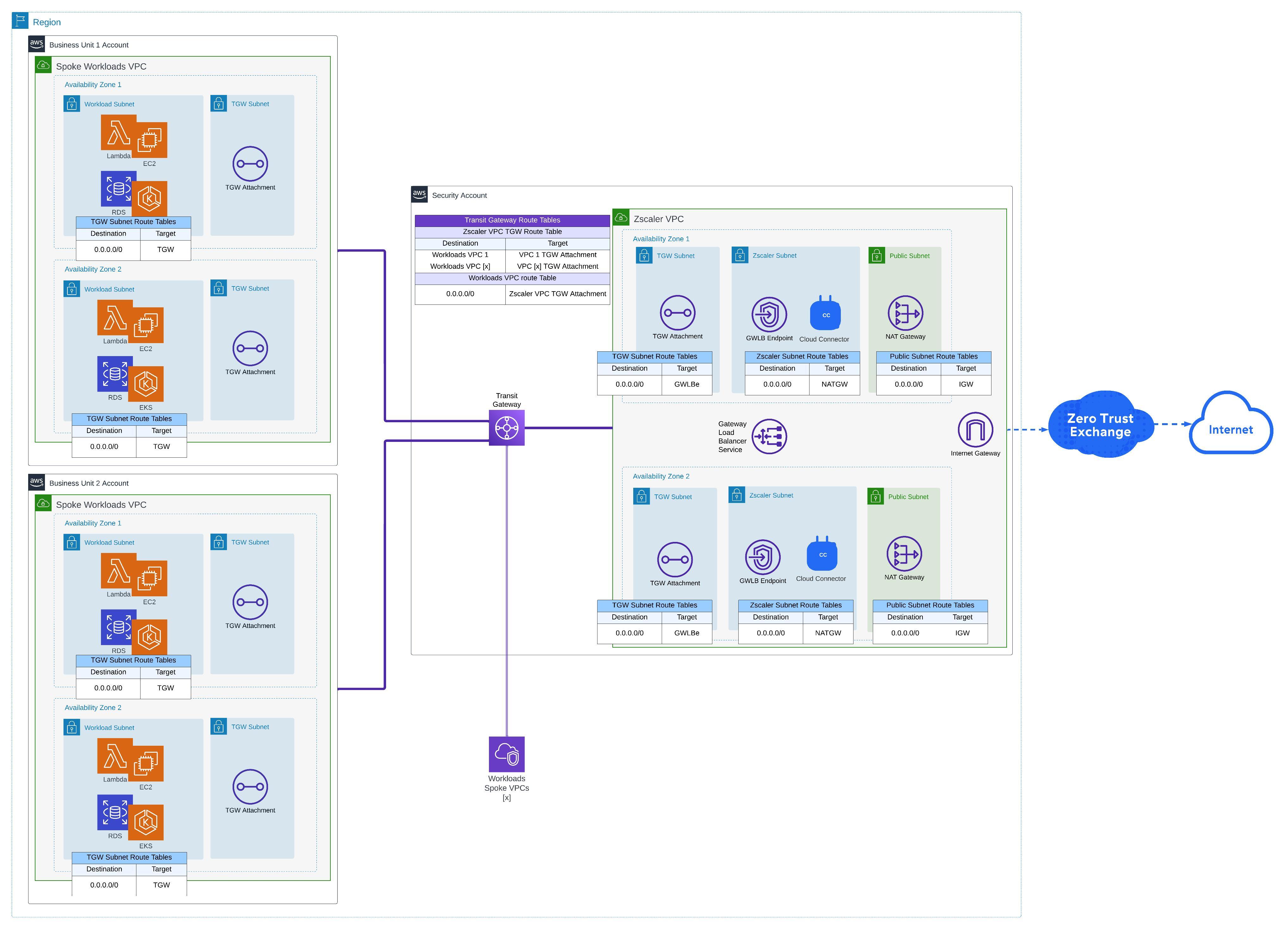

Diagram 1: A hub-and-spoke architecture example diagram with AWS Transit Gateways

In a hub-and-spoke architecture, customers deploy Zscaler Cloud Connectors into regional security VPCs that have internet access to the Zero Trust Exchange, usually via a NAT gateway. The workloads, which reside in the spoke VPCs, are connected to the security VPC, and the Cloud Connectors tunnel their egress traffic to the Zscaler Zero Trust Exchange. This model offers many AWS compute and operational benefits.

I also briefly introduced a hybrid approach. In this model, customers use an AWS Gateway Load Balancer (GWLB) and connect each isolated workload VPC to the security VPC through a GWLB endpoint rather than attaching the VPCs to a transit gateway. This is referred to as a Distributed (GWLB) Endpoint model.

Now, in Part 2, let’s dive deeper into the isolated VPC topology. “One size fits all” does not apply here; there’s no universally right or wrong answer today as to which topology is best. That said, in my opinion, a fully isolated VPC topology is the clear winner.

So why isn’t it the clear winner in the market today? Certain aspects make it favorable from a security perspective. However, as we will cover, there are hurdles that might prevent organizations from moving to a fully isolated VPC model today. It’s important to note that these disadvantages generally apply to large environments with hundreds of VPCs spread across many regions.

So What Exactly Does an Isolated VPC Mean?

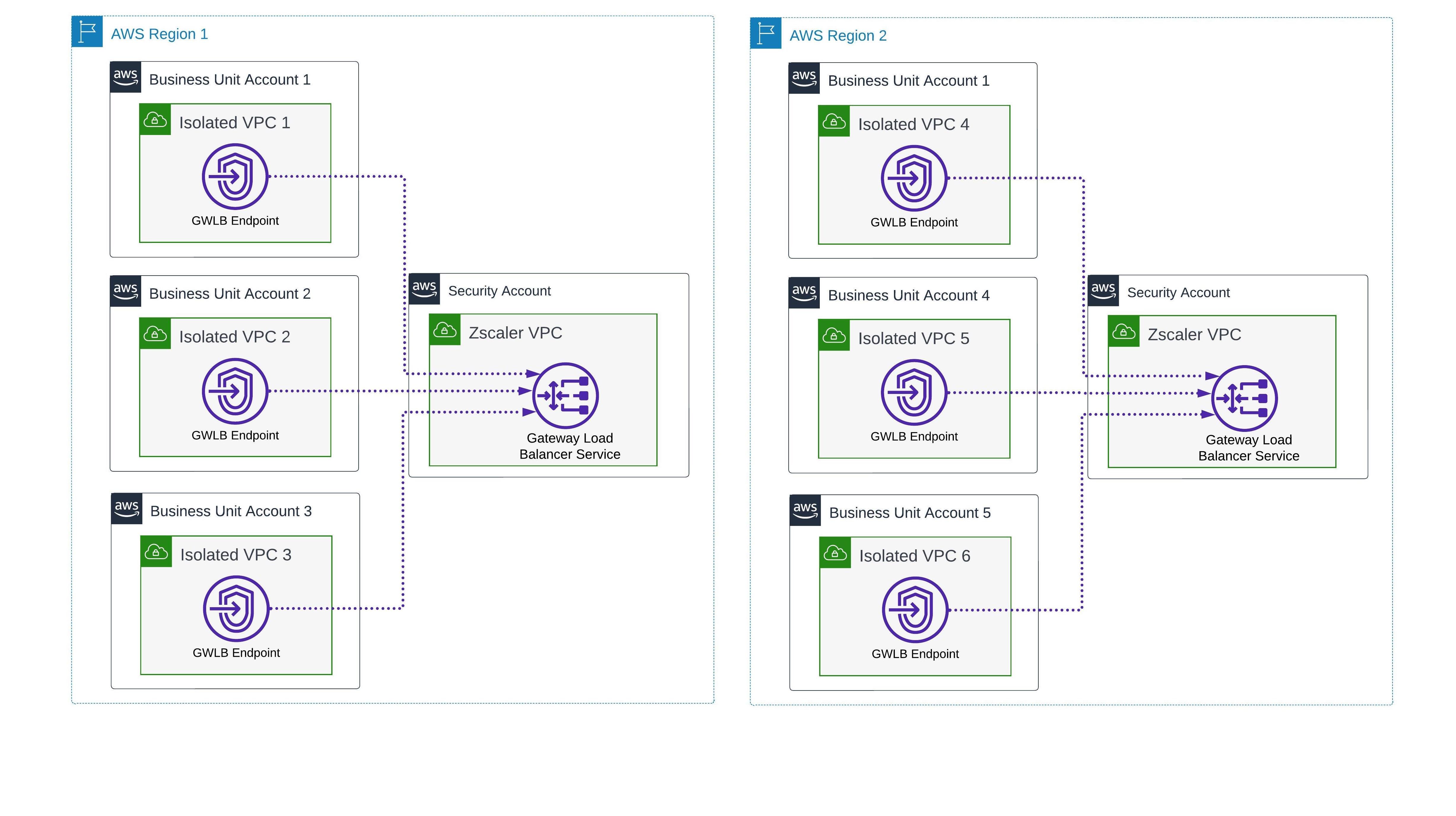

Diagram 2: Isolated VPCs have direct-to-internet connections and no inter-VPC connections

Let me clarify what I mean by isolated VPCs. I’m purely describing an AWS environment in which none of the VPCs have connectivity to one another—no transit gateway, no VPC peering, nothing.

Despite its challenges, adopting an isolated VPC topology becomes a simpler decision in the following situations:

- Your organization starts to explore moving some workloads to a new cloud provider

- Your organization already has isolated VPCs that have no security

- Your organization is looking to rebuild its cloud environments

Diagram 2 shows that each VPC has its own internet connection. There can be variations, such as VPCs with public subnets where each workload receives a public IP address and doesn’t require a NAT gateway. Although I’ve seen this, it’s more common for workloads to sit in private subnets, with only very specific resources remaining public. Usually, these public resources are bastion hosts, load balancers, WAFs, or other services that either allow or help secure inbound internet connections, such as your public-facing website. These workloads are business-critical; however, they don’t make up the majority of workloads we typically see when implementing Zscaler.

*Note: For the purposes of this article, I’m still focused on internet egress security. Keep in mind that a fully isolated VPC topology is generally possible when you can provide secure access to both internal and external resources. I’ll dive into those details in Part 3 of this blog series. Note also that the Cloud Connector component integrates with Zscaler Private Access (ZPA) to provide secure access to private applications without connecting to the internet or routing between sources and destinations.

For context, one of the options I discuss uses the AWS Gateway Load Balancer (GWLB) to enable a deployment topology we refer to as Distributed GWLB Endpoints. GWLB is a unique AWS offering that works differently from traditional load balancers. I highly recommend reading the following AWS articles to better understand GWLB:

Diagram 3: Example diagram with Distributed GWLB Endpoints across 2 AWS Regions without network/VPC connectivity between the workloads and the Zscaler VPC

An Isolated VPC Approach with Cloud Connectors

An interesting point of discussion in many of my workshops is the design and topology Zscaler requires. Technically, there’s no direct limitation or requirement on the Zscaler side: any topology will work as long as the desired workloads/VPCs can route to the respective GWLB VPC endpoints connected to the Cloud Connectors.
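To make that single routing requirement concrete, here is a minimal Python sketch, assuming one workload subnet whose route table keeps intra-VPC traffic local and sends its default route to a GWLB endpoint. The CIDRs and the `vpce-...` ID are hypothetical placeholders, and the lookup only mimics the most-specific-route (longest-prefix-match) behavior of VPC route tables; it does not call any AWS or Zscaler API.

```python
import ipaddress

# Hypothetical route table for a workload subnet: destination CIDR -> target.
# Keys must be normalized CIDR strings (as str(ip_network(...)) produces).
WORKLOAD_ROUTES = {
    "10.1.0.0/16": "local",                 # traffic inside the workload VPC
    "0.0.0.0/0": "vpce-0123456789abcdef0",  # hypothetical GWLB endpoint ID
}


def next_hop(dest_ip: str, routes: dict[str, str]) -> str:
    """Return the target of the most specific route that matches dest_ip."""
    addr = ipaddress.ip_address(dest_ip)
    matches = [
        ipaddress.ip_network(cidr)
        for cidr in routes
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {dest_ip}")
    # Longest-prefix match: the route with the largest prefix length wins.
    best = max(matches, key=lambda net: net.prefixlen)
    return routes[str(best)]


if __name__ == "__main__":
    print(next_hop("10.1.20.5", WORKLOAD_ROUTES))     # stays local
    print(next_hop("203.0.113.10", WORKLOAD_ROUTES))  # goes to the GWLB endpoint
```

Whether the endpoint's GWLB lives in the same VPC or in a regional Zscaler VPC does not change this route table, which is why the Zscaler side imposes no topology of its own.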

That said, customers need to optimize their deployments and follow vendor best-practice recommendations. In many cases, I simply observe and learn your current architecture so I can demonstrate where our components will be deployed. Let’s say, for the sake of argument, that your organization already uses isolated VPCs or wants to move to an isolated VPC model. If this decision has already been made at an environment or enterprise scale, the question becomes: how do you secure all of these environments?

There are currently two options I commonly see and recommend for isolated VPC deployments.

In both scenarios, there is no VPC connectivity: no peering, no transit gateway, and no third-party network overlays (which are really just site-to-site IPsec tunnels to centralized VPCs).

Deciding which option to go with comes down to cost and operational fit:

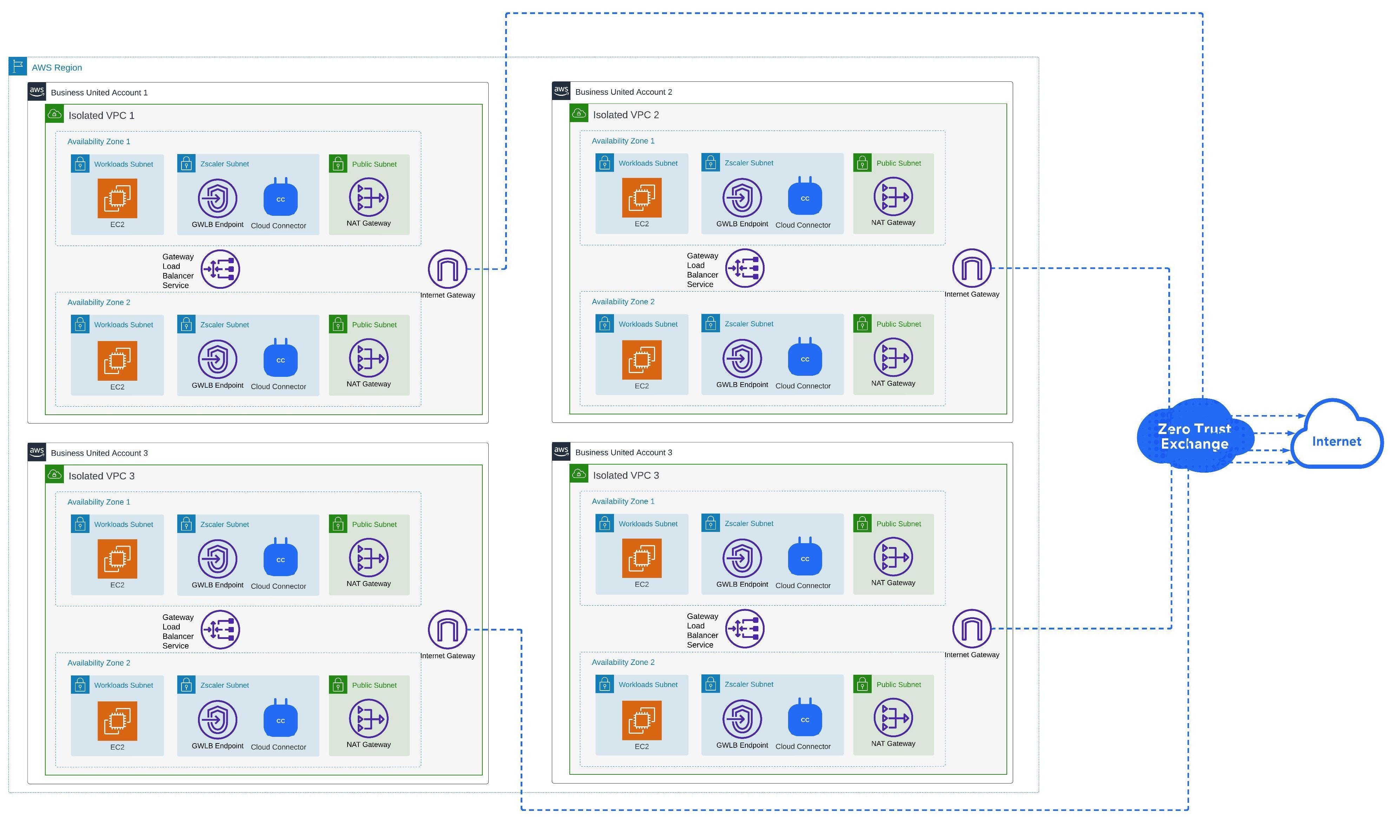

- Option 1: Deploy Cloud Connectors into each Workload VPC

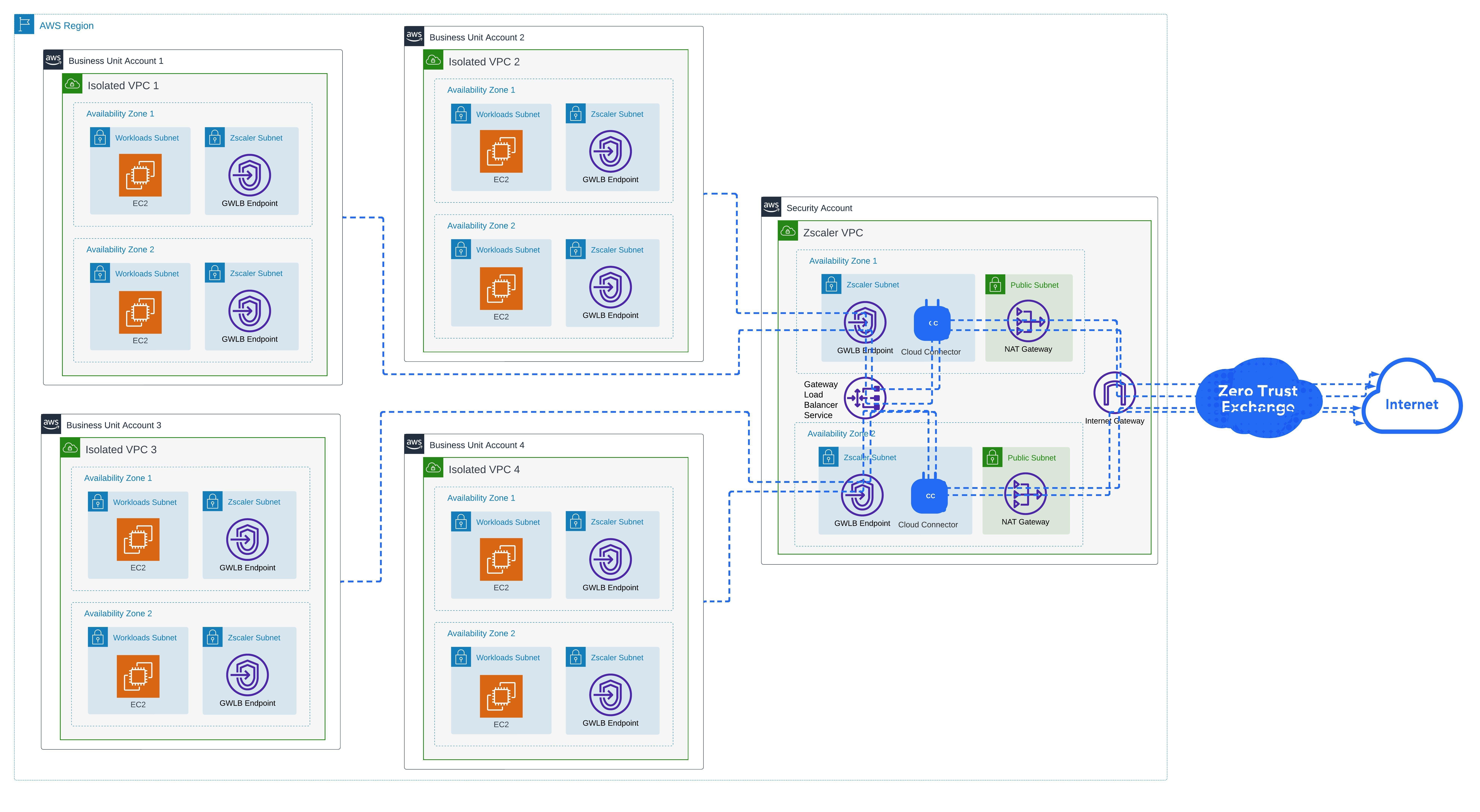

- Option 2: Deploy Cloud Connectors into a Regional Zscaler VPC and distribute GWLB Endpoints in each Workload VPC

As you’ll see in the diagrams below, the end result for both options is identical: workloads route to Cloud Connectors, which securely tunnel traffic to Zscaler Service Edges for inspection. Take a look at these diagrams for some of the major differences; afterward, we’ll cover more details to help with the decision-making process.

Please note that, for simplicity, I have added a dashed blue line to indicate outbound communication from each Workload VPC to the internet across two Availability Zones. It’s assumed that the workload subnets in these VPCs (EC2 instances, RDS instances, Lambda functions, EKS nodes, etc.) route to Zscaler.

Diagram 4: Cloud Connectors with GWLB deployed into each VPC with 2 Availability Zones to secure all outbound-initiated workload traffic to the internet

Diagram 5: Zscaler Regional VPC with Cloud Connectors and GWLB Service, with the GWLB Endpoints deployed to all isolated Workload VPCs to secure outbound-initiated workload traffic to the internet

Ok, so which option should you go with? This requires some discussion to understand all of your requirements, but here is a matrix of the major differences. The first part covers differences in AWS infrastructure, and the second part covers differences in Zscaler infrastructure:

| Requirement | Cloud Connectors in each VPC | Centralized Cloud Connectors with Distributed GWLB Endpoints |
|---|---|---|
| Each Workload VPC has Cloud Connector EC2 instances and an associated GWLB | Yes | No (each AWS Region would have a Zscaler VPC dedicated to Cloud Connector EC2 instances and the GWLB service) |
| Workload VPCs require an Internet Gateway | Yes | No |
| Workload VPCs require a NAT Gateway | Yes (Zscaler best practice, but Cloud Connectors without an ASG can be deployed with an EIP to replace NAT gateways in certain circumstances) | No |
| Workload VPCs require additional subnets for Zscaler | Yes | No (however, many organizations deploy GWLB Endpoints into a dedicated subnet if there are many workload subnets in the VPC) |
| Each Workload VPC shows up in Zscaler Internet Access (ZIA) as a unique Location | Yes | No (however, sublocations can be created for each Workload VPC with GWLB Endpoints) |
| ZIA policies for these workloads can be applied using Locations, Sublocations, and/or the Workload Traffic Location Group | Yes | Yes |
| All ZIA security, inspection, threat protection, DLP, etc., can be applied | Yes | Yes |
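One way to use the matrix is to treat each column as data and filter by your hard constraints. The Python sketch below is illustrative only: the field names are hypothetical shorthand for the matrix rows, the two rows where both options answer "Yes" are omitted because they never distinguish the options, and the footnoted nuances (EIP instead of NAT gateway, sublocations) are reduced to comments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TopologyOption:
    """One column of the comparison matrix (hypothetical field names)."""
    name: str
    cloud_connectors_in_workload_vpc: bool
    workload_vpc_needs_igw: bool
    workload_vpc_needs_nat_gw: bool  # Option 1: best practice; EIP is an exception
    workload_vpc_needs_zscaler_subnets: bool
    unique_zia_location_per_vpc: bool  # Option 2 can use sublocations instead


OPTIONS = [
    TopologyOption("Cloud Connectors in each VPC",
                   cloud_connectors_in_workload_vpc=True,
                   workload_vpc_needs_igw=True,
                   workload_vpc_needs_nat_gw=True,
                   workload_vpc_needs_zscaler_subnets=True,
                   unique_zia_location_per_vpc=True),
    TopologyOption("Centralized Cloud Connectors with Distributed GWLB Endpoints",
                   cloud_connectors_in_workload_vpc=False,
                   workload_vpc_needs_igw=False,
                   workload_vpc_needs_nat_gw=False,
                   workload_vpc_needs_zscaler_subnets=False,
                   unique_zia_location_per_vpc=False),
]


def matching_options(options: list[TopologyOption], **required: bool) -> list[str]:
    """Names of options whose matrix values equal every given constraint."""
    return [
        opt.name
        for opt in options
        if all(getattr(opt, row) == value for row, value in required.items())
    ]


if __name__ == "__main__":
    # Example constraint: workload VPCs must not have an internet gateway.
    print(matching_options(OPTIONS, workload_vpc_needs_igw=False))
```

A constraint such as "every VPC must be its own ZIA Location" (`unique_zia_location_per_vpc=True`) points the other way, which is why the choice usually comes down to operational fit rather than capability.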

Of course, you cannot make this decision without factoring in cost. To this end, let’s look at a hypothetical deployment and the potential differences in AWS costs across the three common topologies.

I can’t stress this enough: please use these calculations as directional guidance for comparing the three topologies. The dollar amounts will vary based on many factors, so it’s more productive to focus on percentage differences.

Cost Comparison Matrix example:

| AWS Service Costs (Annualized) | Cloud Connectors in each VPC | Distributed GWLB Endpoints | Transit Gateway Regional Hubs |
|---|---|---|---|
| Cloud Connector EC2 On-Demand | 20 Cloud Connectors: $13,706 | 2 Cloud Connectors: $3,561 | 2 Cloud Connectors: $3,561 |
| GWLB Services and Endpoints | 10 Services: $2,340 | 1 Service: $763 | 1 Service, 2 Endpoints: $763 |
| Transit Gateways | 0 attachments: $0 | 0 attachments: $0 | 11 attachments: $6,396 |
| Total Annual AWS Cost | $16,046 | $4,324 | $10,720 |

*Costs were calculated with https://calculator.aws on August 7, 2023 using the following:

- Region: us-east-1

- 10 Workload VPCs with 2 Availability Zones

- EC2 On-Demand Instance Pricing for c5.large instances

- 4 TB/month of workload traffic egressing AWS
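The resource counts in the table follow a simple pattern. The Python sketch below reproduces them for 10 workload VPCs in 2 Availability Zones under assumed sizing rules inferred from the table (one Cloud Connector per AZ in each VPC that hosts them, one GWLB service per such VPC, one GWLB endpoint per AZ in each VPC whose traffic enters the GWLB, and one transit gateway attachment per workload VPC plus one for the security VPC). These rules are an illustration, not official Zscaler sizing guidance; the table itself only states the endpoint count for the hub model.

```python
def resource_counts(workload_vpcs: int, azs: int) -> dict[str, dict[str, int]]:
    """Estimate per-topology resource counts for one AWS Region.

    Assumed (illustrative) sizing rules:
    - one Cloud Connector per AZ in each VPC that hosts Cloud Connectors
    - one GWLB service per VPC that hosts Cloud Connectors
    - one GWLB endpoint per AZ in each VPC whose traffic enters the GWLB
    - one TGW attachment per workload VPC, plus one for the security VPC
    """
    return {
        "Cloud Connectors in each VPC": {
            "cloud_connectors": workload_vpcs * azs,
            "gwlb_services": workload_vpcs,
            "gwlb_endpoints": workload_vpcs * azs,
            "tgw_attachments": 0,
        },
        "Distributed GWLB Endpoints": {
            "cloud_connectors": azs,  # all in the regional Zscaler VPC
            "gwlb_services": 1,
            "gwlb_endpoints": workload_vpcs * azs,  # distributed to workload VPCs
            "tgw_attachments": 0,
        },
        "Transit Gateway Regional Hubs": {
            "cloud_connectors": azs,  # all in the regional security VPC
            "gwlb_services": 1,
            "gwlb_endpoints": azs,  # only in the security VPC
            "tgw_attachments": workload_vpcs + 1,
        },
    }


if __name__ == "__main__":
    for topology, counts in resource_counts(workload_vpcs=10, azs=2).items():
        print(topology, counts)
```

The pattern explains the cost gap: the per-VPC model multiplies Cloud Connectors and GWLB services by the number of workload VPCs, while the other two models keep them at regional scale.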

Is there a clear winner? From a pure AWS cost perspective, the Distributed GWLB Endpoint topology costs roughly 60% less than Transit Gateway Regional Hubs and roughly 73% less than deploying Cloud Connectors in each VPC! However, the reality is that your organization’s existing AWS topology, operations, and processes might already be tied to a specific option.
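Because the dollar amounts are only directional, the percentages are the useful output. This short Python sketch recomputes them from the annual totals in the cost table; the totals are copied from the table and nothing else is assumed.

```python
# Annual AWS totals copied from the cost comparison table (USD).
ANNUAL_TOTALS = {
    "Cloud Connectors in each VPC": 16_046,
    "Distributed GWLB Endpoints": 4_324,
    "Transit Gateway Regional Hubs": 10_720,
}


def savings_vs(baseline: str, candidate: str, totals: dict[str, int]) -> float:
    """Percentage by which `candidate` is cheaper than `baseline`."""
    return 100 * (1 - totals[candidate] / totals[baseline])


if __name__ == "__main__":
    for baseline in ("Cloud Connectors in each VPC", "Transit Gateway Regional Hubs"):
        pct = savings_vs(baseline, "Distributed GWLB Endpoints", ANNUAL_TOTALS)
        print(f"{pct:.1f}% lower than {baseline}")
```

Swapping in totals from your own AWS Pricing Calculator estimate keeps the comparison in percentage terms, which is the form the author recommends focusing on.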

The operational cost of changing topologies without proper design and planning might exceed the infrastructure cost saved. If your organization has invested in AWS Transit Gateways, as described in Part 1 of this blog series, I’m not suggesting you simply rip them out and move to a Distributed GWLB Endpoint model. Perhaps you prefer a hybrid approach, or maybe you are looking to migrate over time. In any case, there are many possibilities!

Are there any caveats?

In short, yes. The biggest caveat to the isolated VPC topology is the lack of connectivity between VPCs. You could argue that this is actually the best outcome from a security perspective and, in fact, aligns with the principles of a zero trust architecture, but it’s still a critical operational consideration. How do you solve this, with or without Zscaler?

I’ll cover this in more detail in the next part of this blog series. As a quick teaser, I’ll just list out a few critical questions:

- Can you identify which AWS workloads need to communicate with private apps that aren’t in the same VPCs?

- Are there shared services environments that workloads in all VPCs must connect to?

- How does your team access the private workloads (SSH, RDP, etc.) in these VPCs?

- Are VPCs configured to use default AWS DNS or an internal DNS server?

- If you’re using AWS DNS, are you utilizing Route 53 Private Hosted Zones or Resolver outbound endpoints?

As with all technology and design, this is not an all-or-nothing decision. Many organizations support multiple topologies based on a variety of project and security requirements. It all boils down to having a solid runbook that can operationalize your desired topologies and configurations… make it rinse and repeat!

What’s Next?

I always recommend signing up for our Workload Communications Self-Guided Workshop & Lab. This provides you with a (free) guided experience that shows you how to integrate Cloud Connectors and various workload policies in a self-contained environment.

Additionally, if you have a project for securing your public cloud workloads (AWS, Azure, GCP, etc.), please reach out to your Zscaler account team to schedule a discovery workshop with one of us!

At this point, you might be wondering: wouldn’t I still need a firewall to protect my east-west traffic? Stay tuned for Part 3 of this blog series as I will share my provocative thoughts on why this is mostly unnecessary.